Apple’s ToolSandbox Exposes the Glaring Gap: Open-Source AI Trails Behind Proprietary Models

Most people like

Transform your creative process with our innovative transcription platform designed specifically for content creators. Streamline your workflow, enhance accessibility, and elevate your projects by converting audio and video into precise, searchable text. Discover the tools you need to create engaging content effortlessly.

Transform the way your organization communicates with our cutting-edge AI presentation software designed for enterprise business needs.

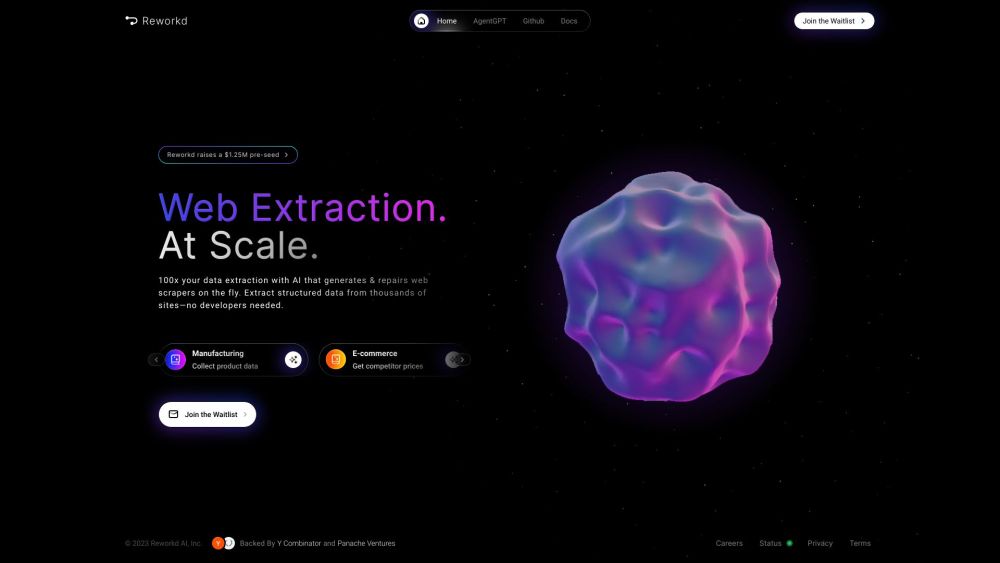
