Anthropic Unveils New Tool to Combat Malicious Users on Claude Chatbot


As we embrace the digital age, automation is transforming industries and enhancing productivity like never before. This evolution not only streamlines processes but also paves the way for innovative solutions that drive growth. In this rapidly changing landscape, understanding the pivotal role of automation is essential for businesses looking to thrive. Join us as we explore the cutting-edge technologies and strategies that are shaping the future of automation.

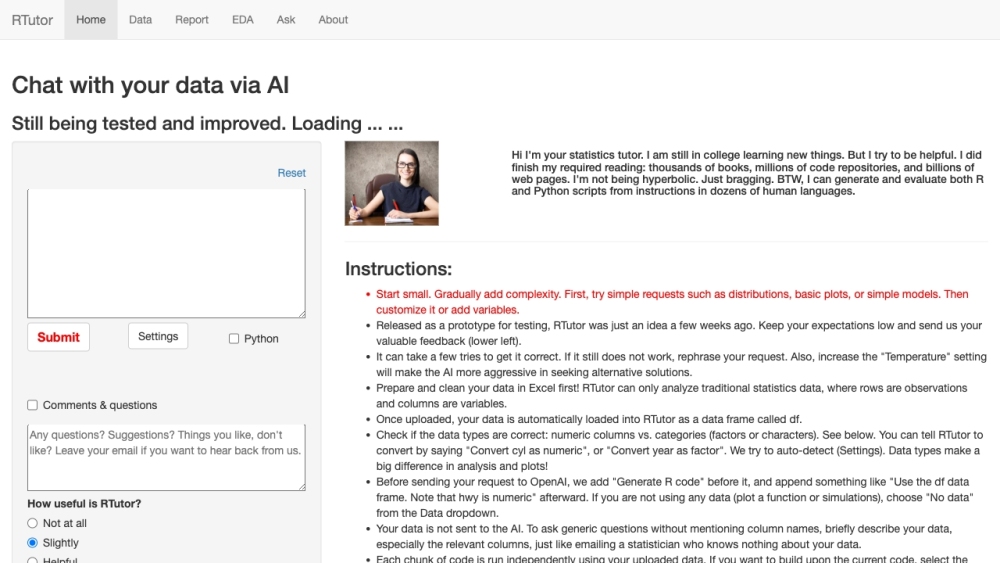

RTutor is an innovative AI-driven application designed for effortless data analysis through natural language processing.

Unlock the power of creativity with our guide to transforming text into breathtaking digital art. Discover how to harness innovative tools and techniques that allow you to turn words into mesmerizing visuals. Whether you're an aspiring artist or a seasoned pro, learn how to elevate your artwork by creating stunning digital designs straight from your imagination.
