Bilibili Releases Open-Source Lightweight Index-1.9B Series Models: Base, Control, Dialogue, and Role-Playing Versions Available

Most people like

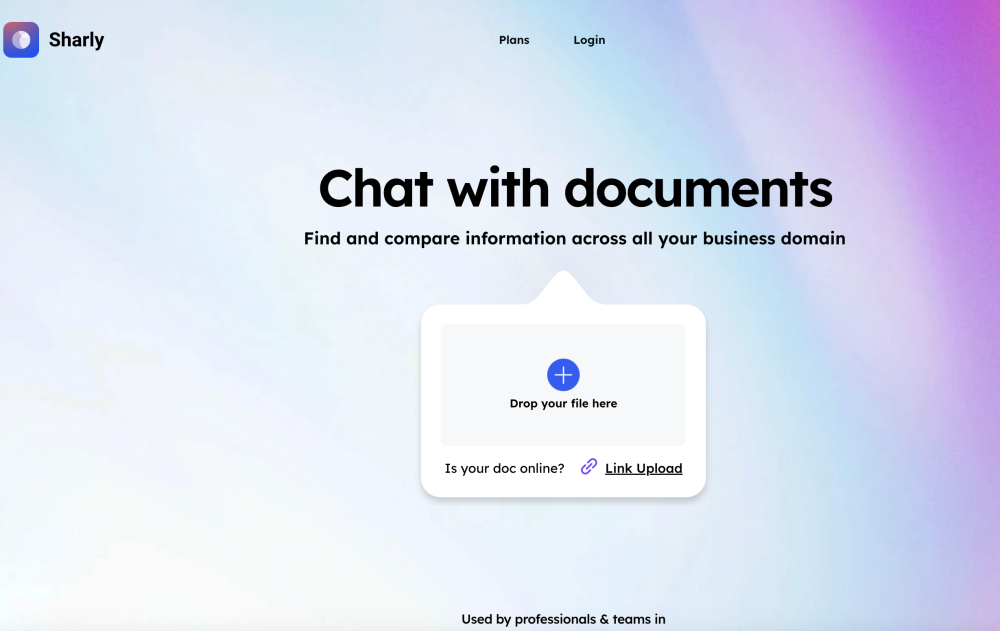

Engage in meaningful conversations with your documents and PDFs! Effortlessly ask questions, extract insights, and explore content like never before. With our innovative tool, transforming static information into interactive dialogue has never been easier, allowing you to enhance your understanding and productivity. Dive into your files and unlock their full potential today!

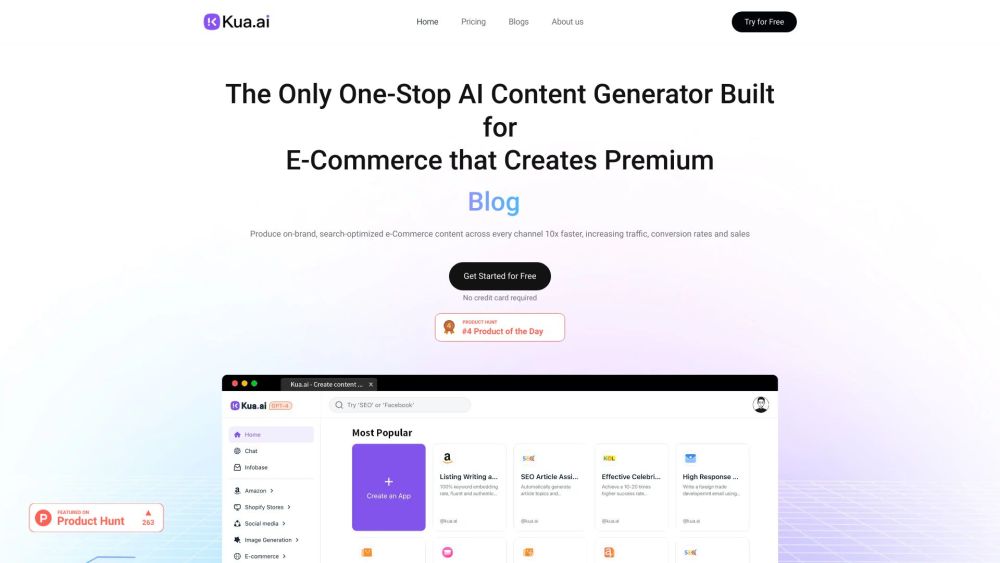

Unlock Ecommerce Success with AI-Powered Content Creation

In today’s competitive online marketplace, leveraging AI content generation can significantly enhance your ecommerce strategy. By automating and optimizing your content creation, you can engage customers more effectively, improve search engine rankings, and ultimately drive sales. Discover how harnessing the power of artificial intelligence can elevate your ecommerce business to new heights.

Elevate your assessment creation with our AI-powered tool, designed to effortlessly generate questions and exams directly from your content. This innovative solution streamlines the process, saving you time while enhancing the quality of your educational materials. Whether you're an educator, trainer, or content creator, our tool uses advanced algorithms to ensure your assessments are both relevant and compelling. Experience a new level of efficiency in exam preparation today!

Find AI tools in YBX

Related Articles

Refresh Articles