ChatGPT Search Tool is Susceptible to Manipulation and Deception


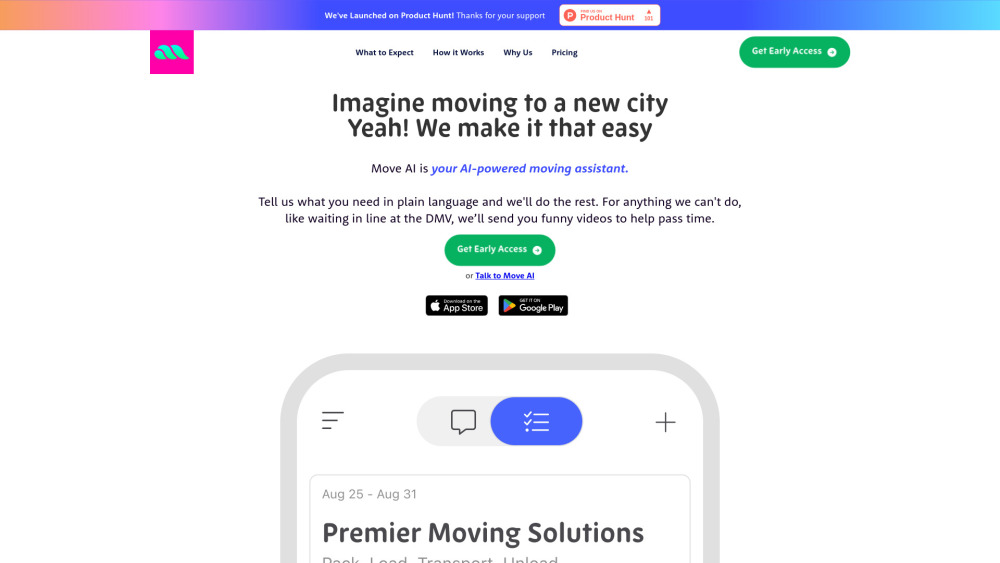

Are you overwhelmed by the thought of your upcoming move? Our AI-powered moving assistant is designed to simplify the relocation process, making it easier and more efficient. From organizing your moving tasks to finding the best services tailored to your needs, our intelligent platform offers personalized support every step of the way. Say goodbye to the chaos of moving and embrace a smoother, more enjoyable experience with our innovative AI technology. Let us help you turn your move into a seamless transition.

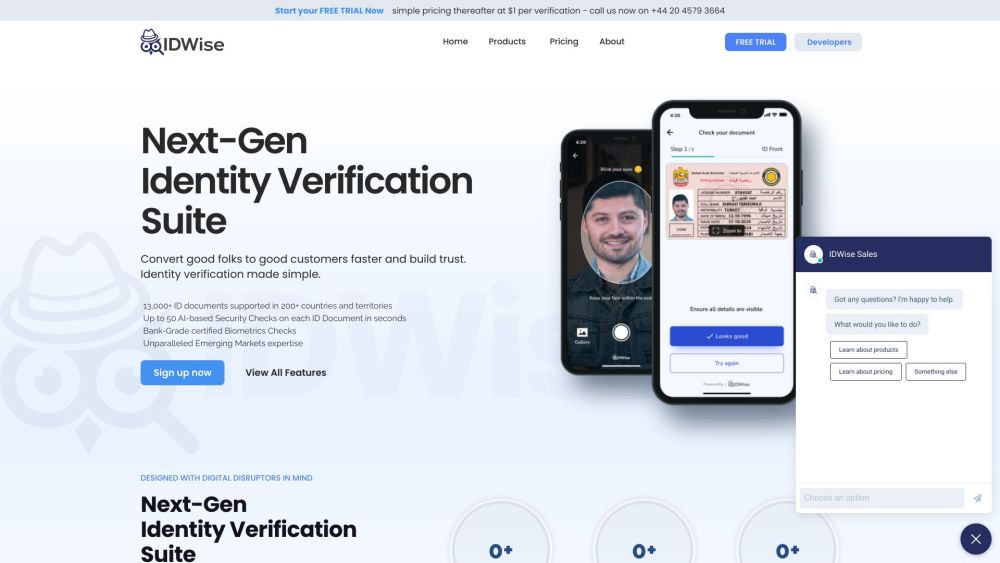

IDWise is an innovative AI-powered identity verification solution designed to assist businesses in seamlessly authenticating customer identities. With advanced technology, IDWise enhances security and builds trust, making identity verification efficient and reliable.

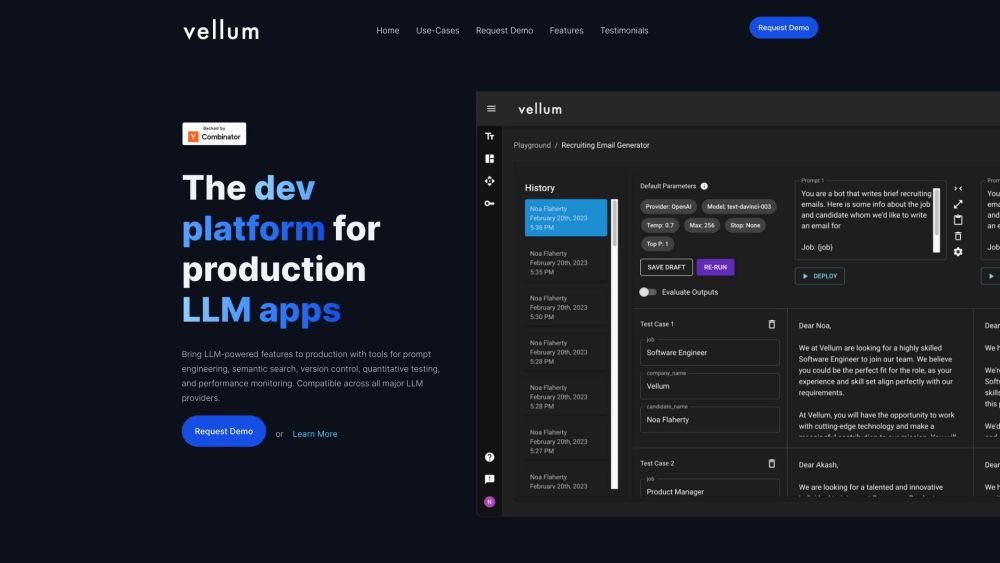

Introducing a cutting-edge development platform designed specifically for creating large language model (LLM) applications. This innovative platform streamlines the development process, providing developers with the tools and resources needed to build, test, and deploy powerful LLM-driven solutions efficiently. Whether you're a seasoned developer or just starting out, our platform offers the flexibility and support to bring your AI ideas to life. Join us in revolutionizing the way LLM applications are developed!

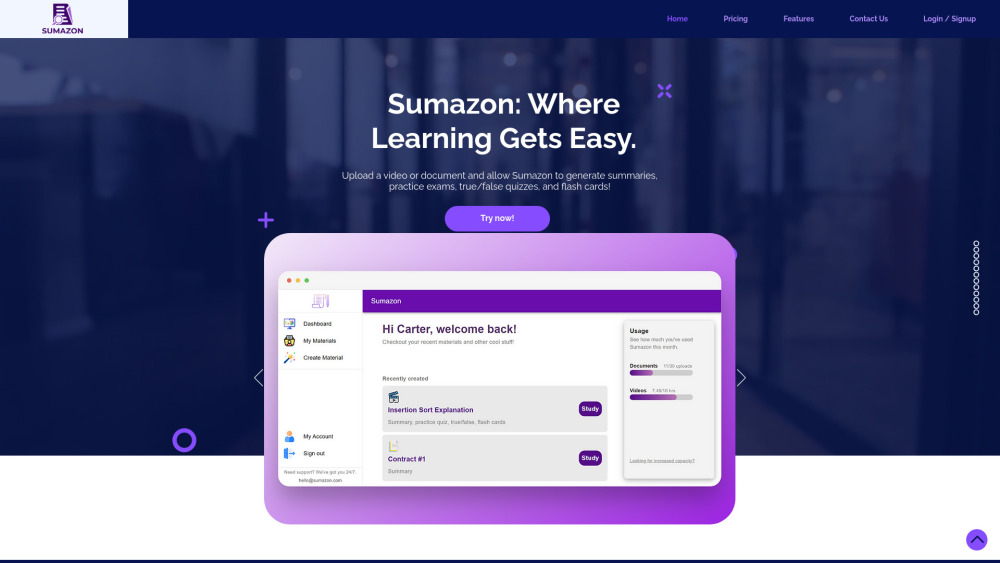

Introducing an innovative AI tool designed to streamline your study sessions by summarizing essential study materials and generating customized quizzes. This powerful tool helps students enhance their learning efficiency, retention, and exam preparation, making it an invaluable resource for anyone seeking academic success.
