Discover the Power Beyond Q&A: Vectara Unveils Innovative RAG-Powered Chat Module

Most people like

Discover Leo AI, the best artificial intelligence for students! The perfect tool to help you with your homework and revision.

In today's digital landscape, capturing attention is more crucial than ever. An AI video generator for text and photos allows you to easily convert written content and images into captivating videos. This innovative tool streamlines the creative process, enabling you to engage your audience with stunning visual narratives. Whether you're a content creator, marketer, or business owner, leveraging an AI video generator can elevate your storytelling and enhance your online presence. Discover how you can turn your text and photos into compelling videos that resonate with viewers and boost engagement.

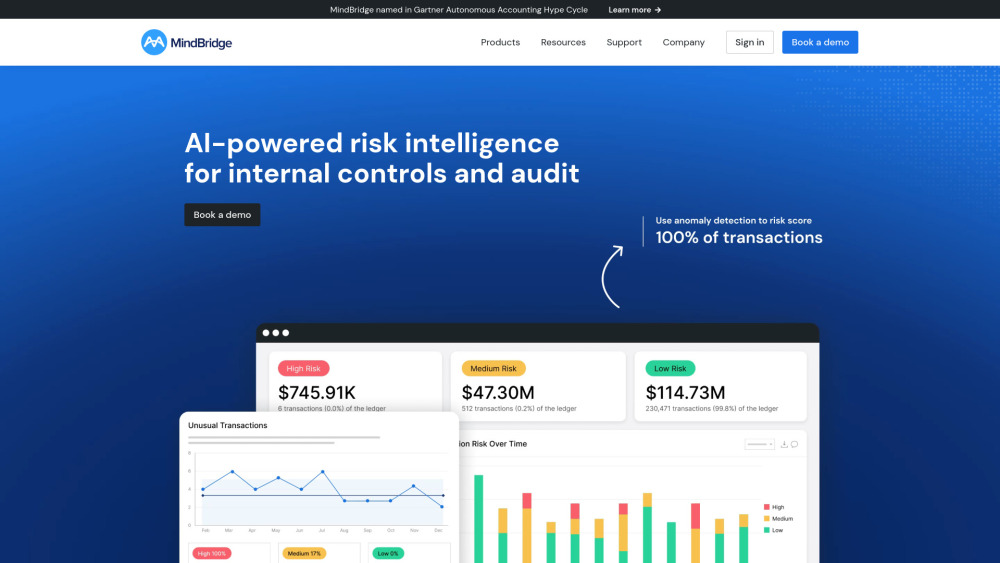

In today’s rapidly evolving financial landscape, navigating risks is crucial for success. As a global leader in financial risk discovery, we specialize in identifying and mitigating potential threats to assets and investments. Our innovative solutions empower businesses to safeguard their financial health and enhance decision-making through comprehensive risk assessment and management strategies. Join us as we redefine the standards of financial risk discovery to ensure your organization's resilience and growth.
