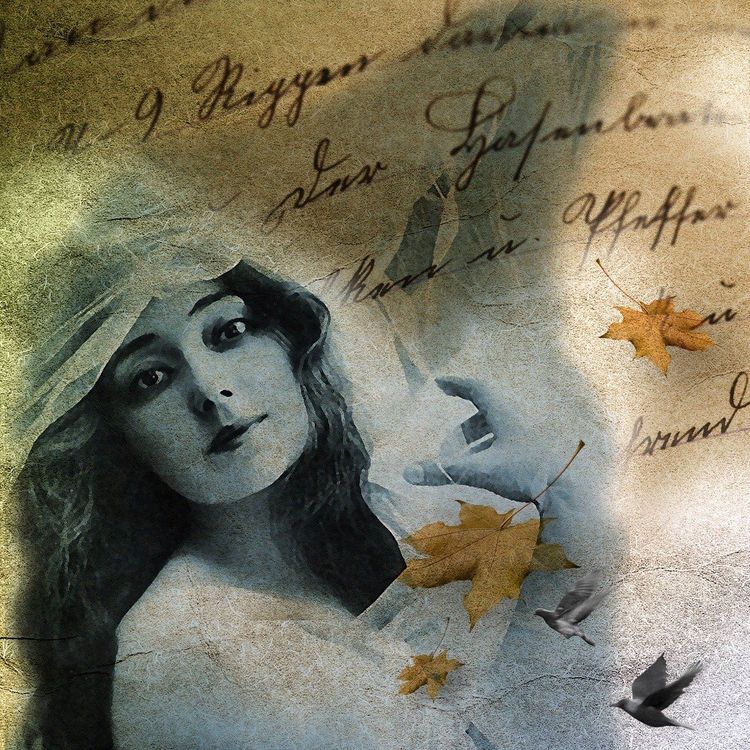

Elon Musk’s AI company xAI unveiled Grok 2, its latest language model, on Tuesday, introducing highly effective image generation capabilities that have sparked significant controversy on X.com (formerly Twitter).

Within hours of launch, users inundated X.com with AI-generated images featuring graphic violence, explicit sexual content, and manipulated photos of public figures in damaging contexts.

This surge in controversial content reflects X.com’s well-known hands-off approach to content moderation and marks a stark contrast to the stringent policies enforced by other top AI companies. Google, OpenAI, Meta, and Anthropic employ strict content filters and ethical guidelines in their image generation models to mitigate the creation of harmful or offensive material.

Grok 2’s unregulated image generation capabilities, by comparison, exemplify Musk’s long-standing opposition to rigorous content oversight on social media platforms. By allowing Grok 2 to produce potentially offensive images without evident safeguards, xAI has reignited debates over technology companies' responsibilities in managing their innovations. This hands-off philosophy runs counter to the industry’s growing emphasis on responsible AI development.

Grok 2’s release follows Google’s recent challenges with its own AI image generator, Gemini, which faced backlash for producing historically inaccurate images that some labeled as "woke." Google admitted its efforts to promote diversity led to inaccuracies, resulting in the temporary suspension of Gemini’s image generation feature as it sought improvements.

Grok 2, however, seems to lack comparable restrictions, further emphasizing Musk’s resistance to content moderation. The model’s capabilities pose significant ethical questions, prompting discussions within the AI research community about the balance between rapid technological advancement and responsible development.

For enterprise decision-makers, Grok 2’s release highlights the crucial need for robust AI governance frameworks. As AI technologies become more powerful and accessible, companies must assess their ethical implications and potential risks. This incident serves as a cautionary tale for businesses considering advanced AI integration, stressing the importance of comprehensive risk assessments, strong ethical guidelines, and effective content moderation strategies to prevent reputational damage, legal liabilities, and loss of customer trust.

The incident may also accelerate regulatory scrutiny of AI technologies, leading to new compliance requirements for businesses leveraging AI. Technical leaders should monitor these developments closely and adapt their strategies as needed. This controversy underscores the importance of transparency in AI systems, encouraging companies to prioritize explainable AI and straightforward communication about their tools' capabilities and limitations.

As language models produce increasingly sophisticated content, the potential for misuse escalates. The Grok 2 release highlights the urgent need for industry-wide standards and enhanced regulatory frameworks governing AI development and deployment. Additionally, it reveals the limitations of current social media moderation strategies, as X.com’s hands-off policy struggles to keep pace with increasingly sophisticated AI-generated content.

This event marks a critical juncture in the discourse surrounding AI governance and ethics, illustrating the divide between Musk’s vision of unrestrained development and the more cautious approaches advocated by many in the tech industry. In the coming weeks, demands for regulation and industry standards will likely intensify, shaping the future of AI governance. Policymakers may feel pressured to act, potentially expediting the development of AI-focused regulations in the U.S. and beyond.

For now, X.com users confront an influx of AI-generated content that tests the limits of acceptability. This situation serves as a powerful reminder of the responsibilities that come with developing and deploying these technologies. As AI continues its rapid evolution, the tech industry, policymakers, and society must tackle the complex challenges of ensuring these powerful tools are used responsibly and ethically.