Emerging Open-Source AI Vision Model Challenges ChatGPT: Key Issues to Consider

Most people like

Introducing our cutting-edge AI writer designed to produce plagiarism-free content that goes undetected. Experience the power of advanced technology that ensures originality and creativity in every piece, catering to your unique writing needs while enhancing your online presence. Discover how our undetectable AI writer can elevate your content strategy effortlessly.

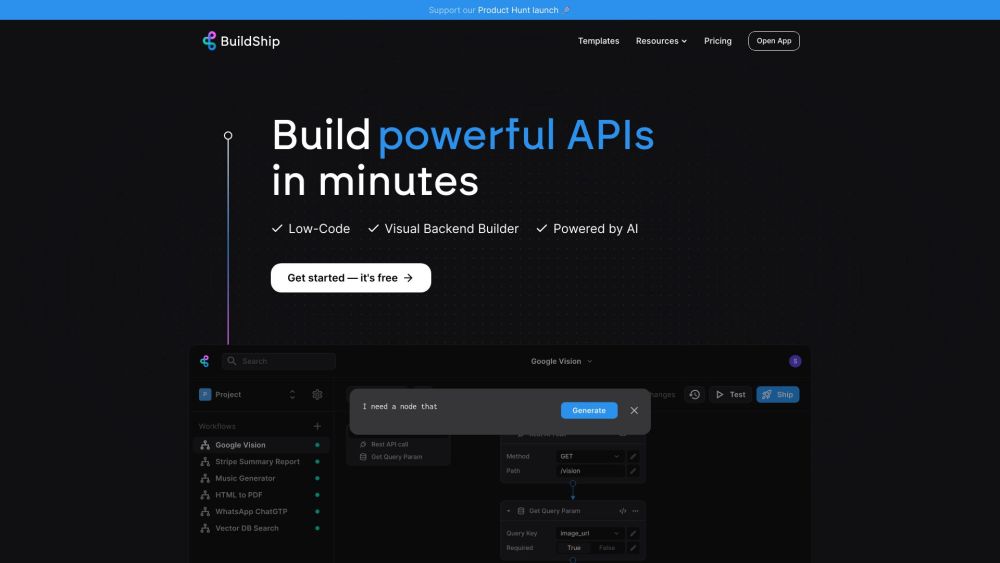

Discover a visual low-code platform designed to empower you with seamless API creation, efficient scheduled jobs, and streamlined backend tasks. Unlock the potential of your development process with intuitive tools that simplify complex workflows.

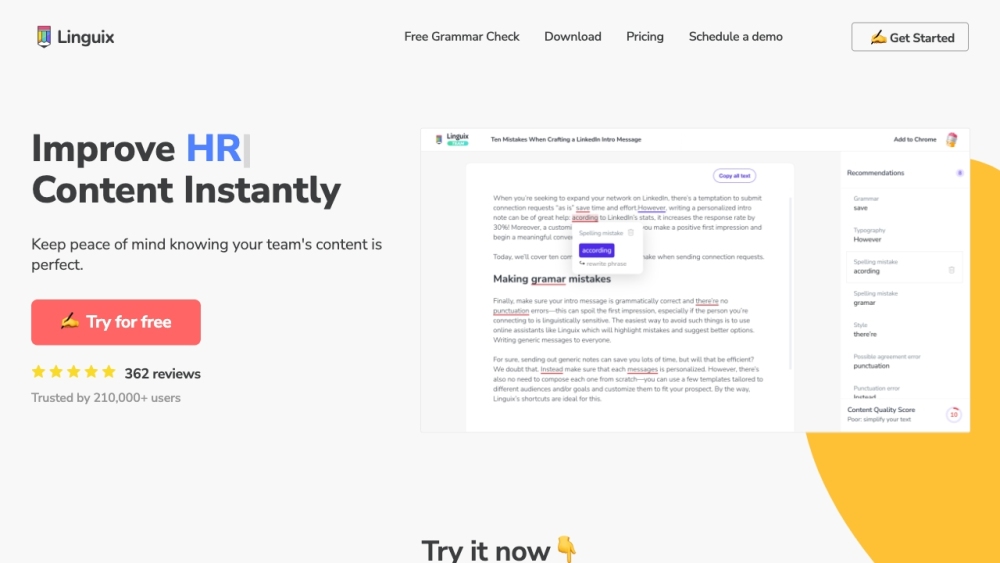

Linguix enhances your writing through advanced grammar and spell checking, efficient text rewriting, and a variety of additional features designed to polish your content.

Discover how AI-driven sports cameras are transforming the landscape of sports coverage, streaming, and analytics. These innovative systems enhance live broadcasting quality and provide in-depth performance analysis, giving fans and coaches unparalleled insights into every game. Join us as we explore the future of sports technology and the impact of automation in capturing the excitement of athletic events.

Find AI tools in YBX
