Exploring Kimi Chat's Dark Side: 2 Million Words Input Support and Upcoming Multimodal Products Set for Release in 2024!

Most people like

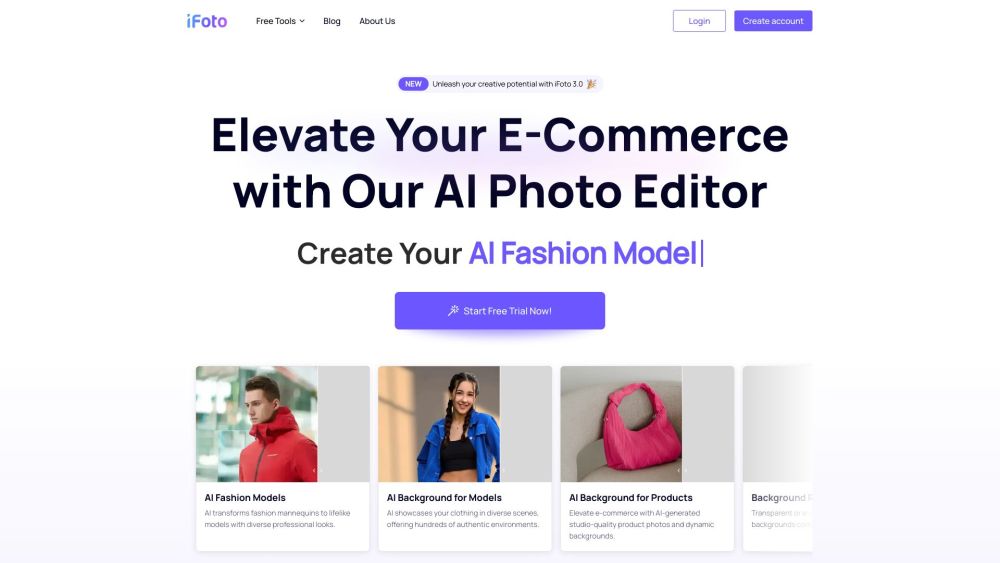

Discover a cutting-edge AI photo editor that offers advanced features to transform your images effortlessly. This powerful tool is designed for photographers and enthusiasts alike, making photo editing intuitive and accessible.

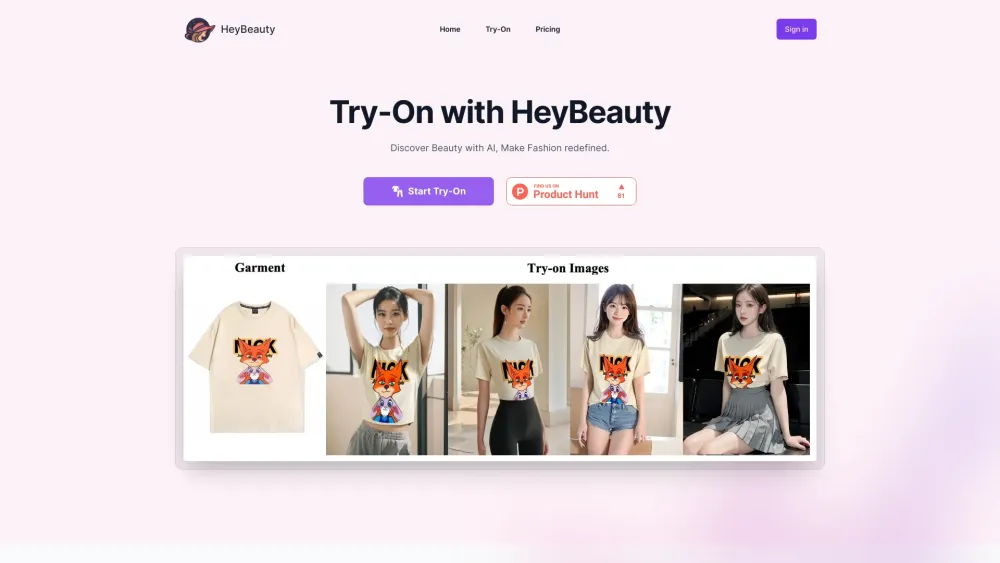

Transform Your Fashion Experience: Unleashing the Future of Style

In today's fast-paced world, the way we engage with fashion is rapidly evolving. Discover how innovative technologies and creative approaches are revolutionizing the fashion landscape, making it more personalized, accessible, and sustainable. Step into the future of style and learn how to enhance your fashion journey like never before.

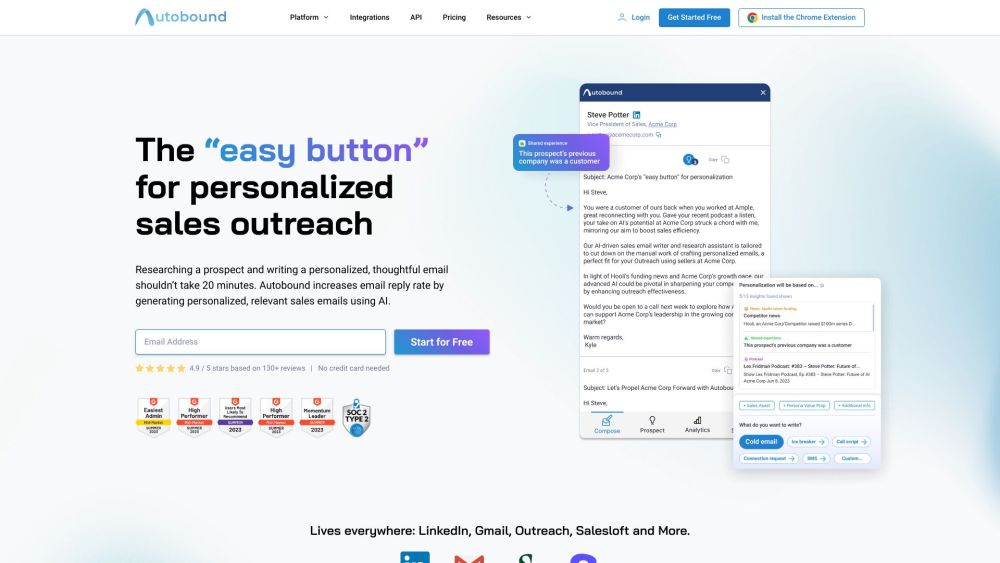

Introducing our AI-powered platform designed for hyper-personalized outreach. This innovative tool leverages advanced algorithms to tailor communication strategies, ensuring each interaction resonates with your audience. Experience the future of marketing as we transform how you connect with customers, making every message more relevant and impactful. Elevate your engagement efforts and drive conversions with our cutting-edge technology.

Unlock your creativity and transform your ideas into breathtaking visuals using AI prompts. Whether you're an artist, designer, or hobbyist, harnessing the power of artificial intelligence can elevate your image generation process. Discover how to create captivating images effortlessly and explore the potential of AI-driven tools to enhance your artistic vision. Transform your concepts into reality today!
