Google CEO Sundar Pichai Advocates for 'Sensible Regulation' in AI Development

Most people like

Whisper Memos is an innovative AI-driven application that transforms voice memos into accurate transcripts. Perfect for professionals and students alike, this tool enhances productivity by simplifying the process of documenting thoughts and ideas.

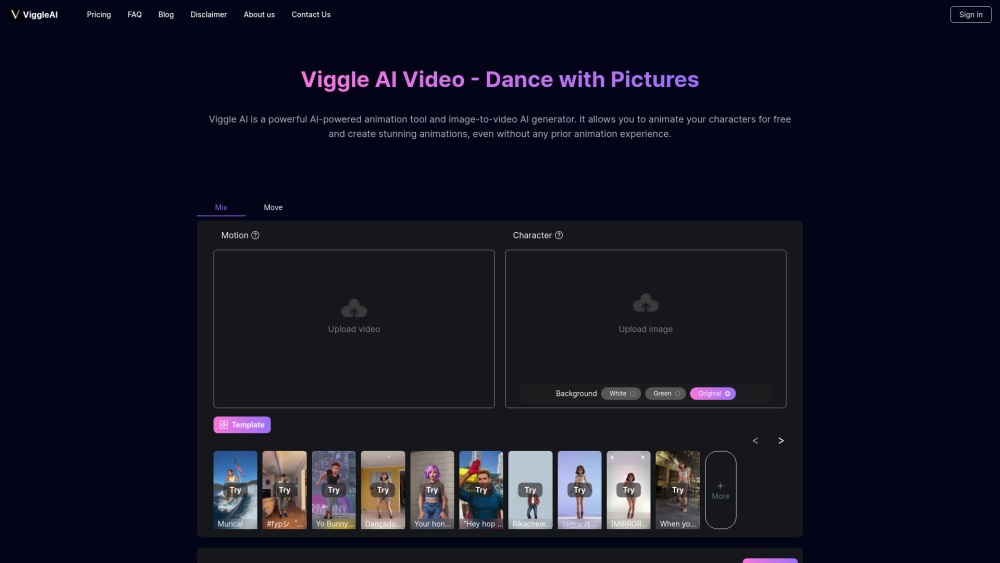

Transforming static images into captivating videos has never been easier, thanks to the innovative AI image-to-video generator. This cutting-edge technology leverages artificial intelligence to animate your visual content, allowing you to breathe life into photographs and illustrations. Whether you're a content creator, marketer, or simply looking to enhance your digital storytelling, this tool opens up a world of creative possibilities. Discover how the AI image-to-video generator is reshaping the way we visualize and share our ideas through dynamic visuals.
