Google Develops AI to Support and Assist Individuals with Speech Impairments

Most people like

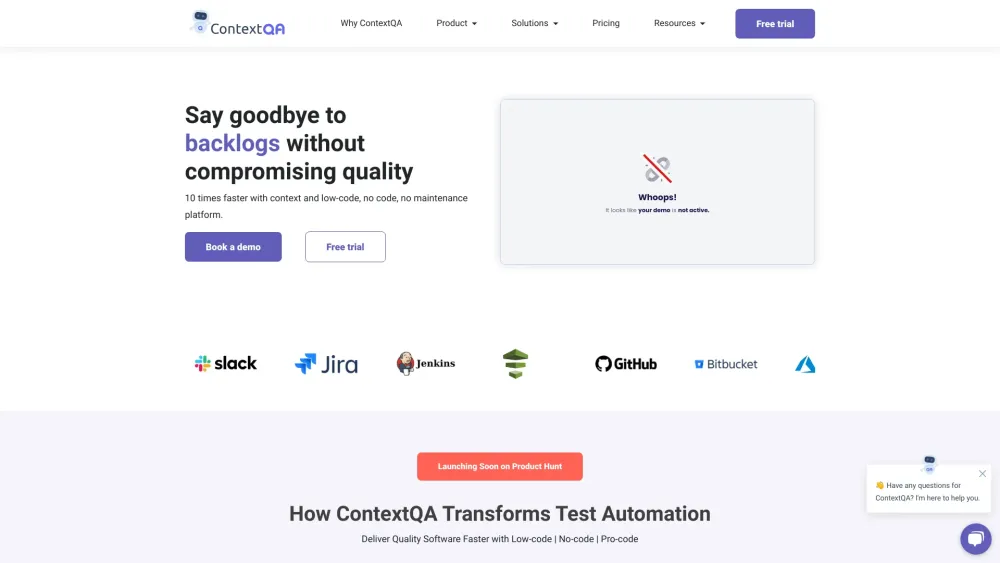
