Google Unveils New ‘Open’ AI Models Prioritizing Safety Features

Most people like

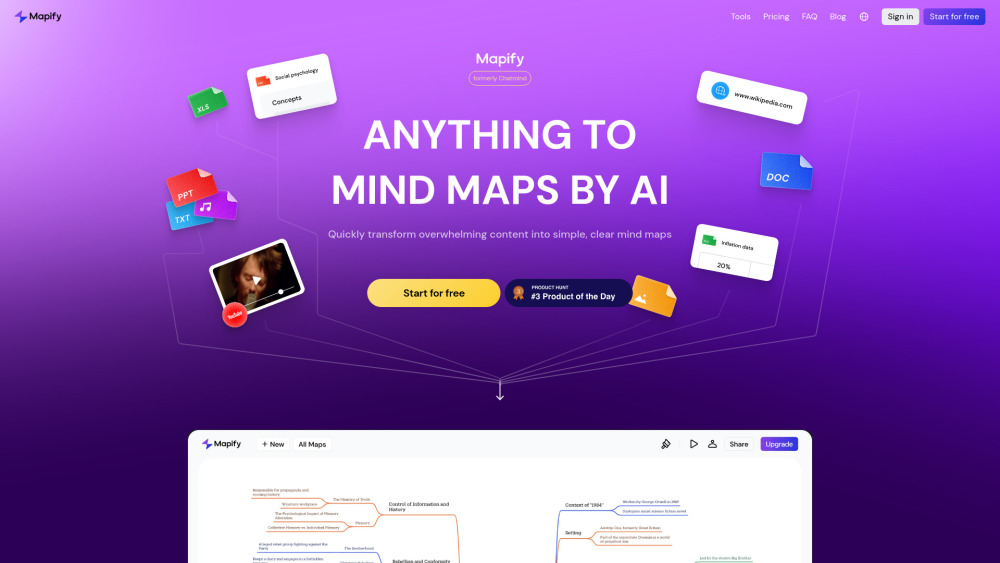

Discover our free online AI-powered mind mapping tool, designed to enhance your brainstorming sessions and boost creativity. With intuitive features and advanced algorithms, this tool helps you visually organize your thoughts and ideas effortlessly. Whether you’re planning a project, outlining a story, or studying complex topics, our mind mapping tool streamlines your process and transforms chaotic thoughts into structured, actionable plans. Unlock the power of your imagination and elevate your productivity with our easy-to-use, AI-driven platform.

Introducing our AI homework helper, designed to provide you with precise solutions and guidance for all your academic needs. Whether you're tackling complex math problems, writing essays, or conducting research, our intelligent tool will enhance your learning experience by delivering accurate and reliable answers. Unlock your academic potential today!

Introduction:

Discover the ultimate online tool for creating realistic deepfake face swaps effortlessly. Whether you're looking to enhance your video content, craft engaging visuals, or explore the fascinating world of deepfake technology, our user-friendly platform empowers you to swap faces in a seamless and convincing manner. Dive into the exciting possibilities of deepfake creation today!

Find AI tools in YBX
