How This Founder Trained His AI to Avoid Rickrolling Users


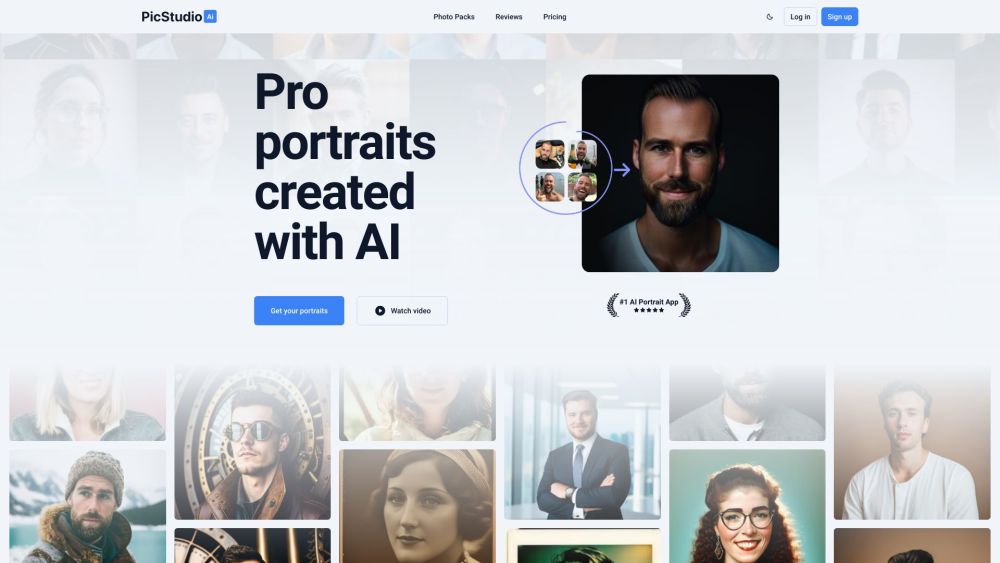

