Introducing Video-ChatGPT: Enhance Your Video Editing Experience with Engaging Tools


Discover an AI-driven stock image library that offers a vast collection of royalty-free images, effortless downloads, and regular updates to keep your projects fresh and engaging. Enhance your creative work with our innovative image generator!

Introducing our AI receptionist, designed specifically for clinics, which manages calls and bookings 24/7. Improve your clinic's efficiency and patient experience with an intelligent, automated solution that never sleeps.

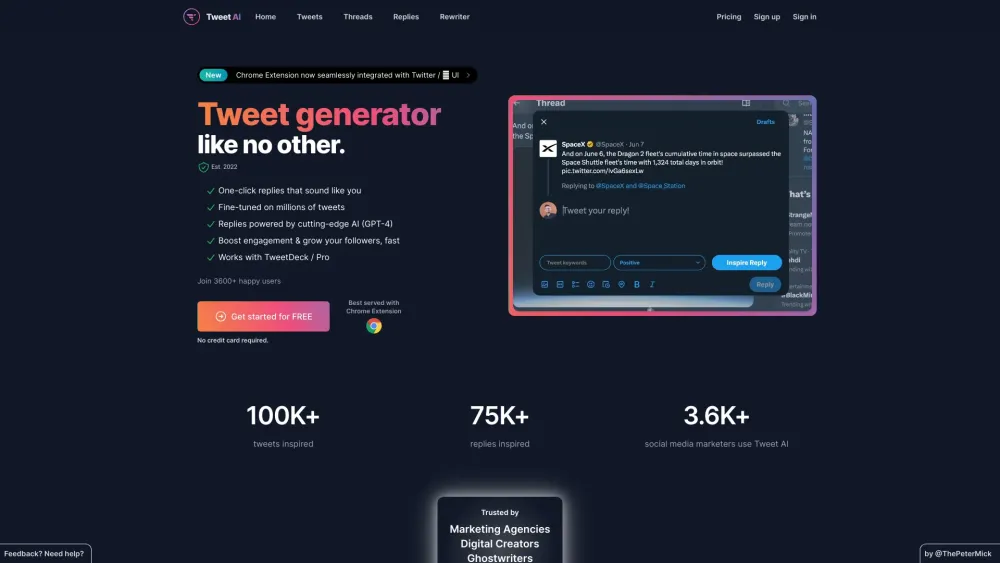

Increase Sales and Engagement on X

In today's competitive market, growing sales and audience engagement on X matters more than ever. The platform lets you connect with your target audience, drive conversions, and build lasting relationships. With strategies tailored to your presence on X, you can raise your brand's visibility and improve your results. Ready to rethink your approach? Let’s explore how to optimize your sales and engagement on X.
