Meta Launches Llama 3: Claims It's One of the Top Open Models on the Market

Most people like

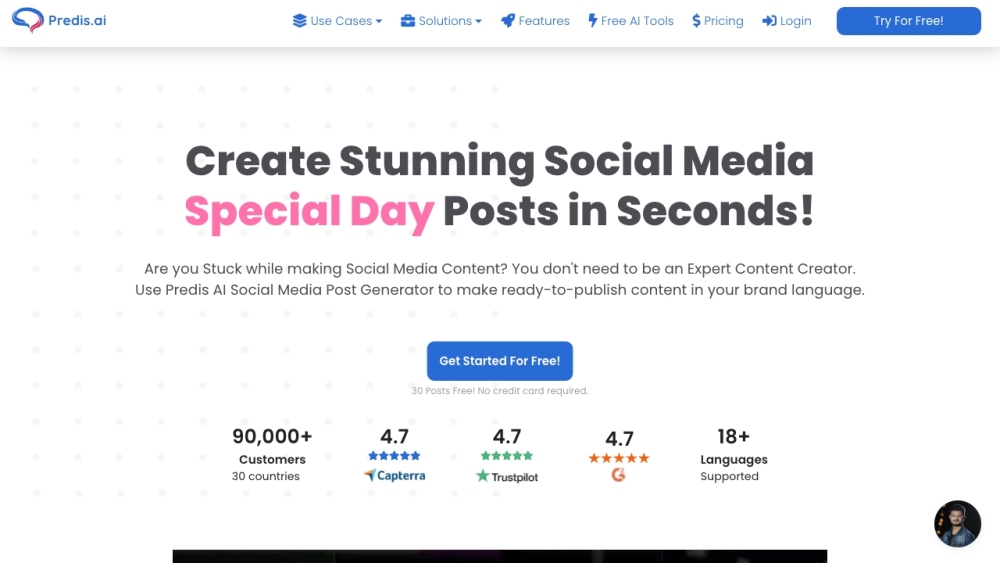

Predis.ai is an advanced AI marketing tool designed for social media, specializing in content creation and insightful analysis. Whether you're looking to enhance your online presence or streamline your marketing strategies, Predis.ai empowers you to engage your audience effectively.

Guard Your Users Against Harmful Content

In today's digital landscape, safeguarding your users from harmful content is more crucial than ever. As online interactions increase, the risk of exposure to inappropriate or dangerous material also rises. By implementing robust content moderation strategies, you not only protect your audience but also enhance their overall experience, building trust and loyalty to your platform. Prioritize user safety and create a secure online environment today.

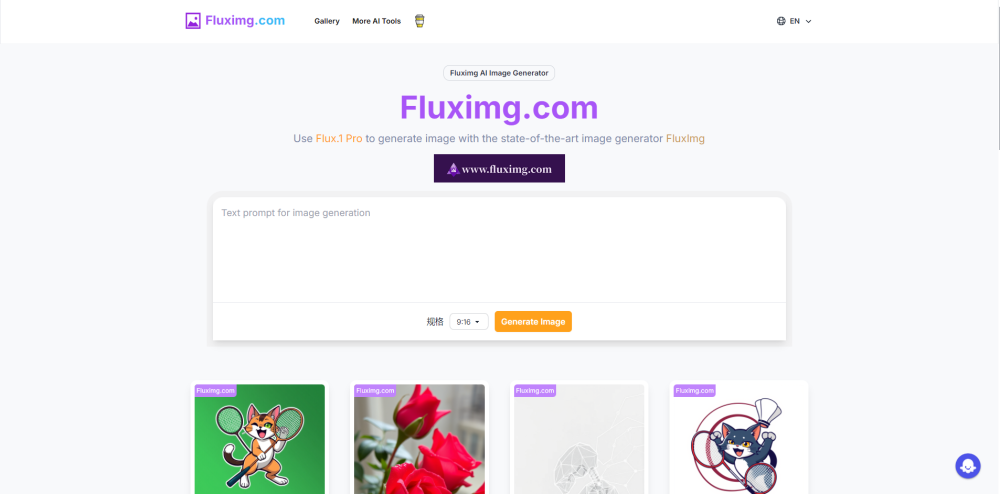
