Meta's Llama 3.2: A New Era in Multimodal AI

Today at Meta Connect, the company unveiled Llama 3.2, its first major vision model, which understands both images and text.

Llama 3.2 includes small and medium-sized vision models (11B and 90B parameters) as well as lightweight text-only models (1B and 3B parameters) optimized for mobile and edge devices.
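To see why the 1B and 3B models are the ones aimed at phones and edge hardware, a back-of-envelope estimate of weight storage helps. The sketch below is only an approximation: it treats the model names as exact parameter counts, assumes 16-bit and 4-bit storage (neither precision is stated in the announcement), and ignores activations, cache memory, and runtime overhead. The function name `weight_gigabytes` is illustrative.

```python
# Back-of-envelope only: counts weight storage and nothing else.
# Parameter counts are taken from the model names (an approximation),
# and the storage precisions are assumptions, not Meta's published figures.
MODEL_PARAMS = {
    "1B": 1_000_000_000,
    "3B": 3_000_000_000,
    "11B": 11_000_000_000,
    "90B": 90_000_000_000,
}
BITS_PER_PARAM = {"16-bit": 16, "4-bit": 4}


def weight_gigabytes(params: int, bits: int) -> float:
    """Return the storage needed for the weights alone, in gigabytes (10^9 bytes)."""
    return params * bits / 8 / 1e9


if __name__ == "__main__":
    for name, params in MODEL_PARAMS.items():
        sizes = ", ".join(
            f"{label}: {weight_gigabytes(params, bits):g} GB"
            for label, bits in BITS_PER_PARAM.items()
        )
        print(f"{name:>3} -> {sizes}")
```

Under these assumptions the 1B model needs about 2 GB for its weights at 16 bits, while the 90B model needs about 180 GB, which is why only the smaller models are plausible candidates for on-device use.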

“This is our first open-source multimodal model,” proclaimed Meta CEO Mark Zuckerberg during his keynote. “It will enable a wide range of applications requiring visual comprehension.”

Like its predecessor, Llama 3.2 offers a 128,000-token context length, enough to accept hundreds of textbook pages of text in a single input. As for model size, higher parameter counts typically improve accuracy and the ability to handle complex tasks.
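The "hundreds of pages" figure can be sanity-checked with simple arithmetic. The sketch below is a rough estimate, not a measurement: the ratio of 0.75 English words per token and the density of 400 words per textbook page are both assumptions chosen for illustration, and real values vary with the tokenizer and the text.

```python
# Rough estimate only: both ratios below are assumptions, not Meta's figures.
CONTEXT_TOKENS = 128_000   # context length stated in the article
WORDS_PER_TOKEN = 0.75     # assumed average for English text
WORDS_PER_PAGE = 400       # assumed density of a textbook page


def estimate_pages(
    tokens: int,
    words_per_token: float = WORDS_PER_TOKEN,
    words_per_page: int = WORDS_PER_PAGE,
) -> int:
    """Convert a token budget into an approximate page count."""
    words = tokens * words_per_token
    return round(words / words_per_page)


if __name__ == "__main__":
    print(f"~{estimate_pages(CONTEXT_TOKENS)} pages")
```

With these assumed ratios, 128,000 tokens comes to roughly 96,000 words, or about 240 pages. A denser page of 500 words would bring that down to about 190, still in the "hundreds of pages" range.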

Meta also introduced official Llama Stack distributions today, so developers can work with the models in a variety of environments, including on-premises, on-device, cloud, and single-node setups.

“Open source is — and will continue to be — the most cost-effective, customizable, and reliable option available,” stated Zuckerberg. “We’ve reached an inflection point in the industry; it's becoming the standard, akin to the Linux of AI.”

Competing with Claude and GPT-4o

Meta released Llama 3.1 just over two months ago, and the company says Llama has achieved tenfold growth since then.

“Llama continues to advance rapidly,” noted Zuckerberg. “It’s unlocking an increasing range of functionalities.”

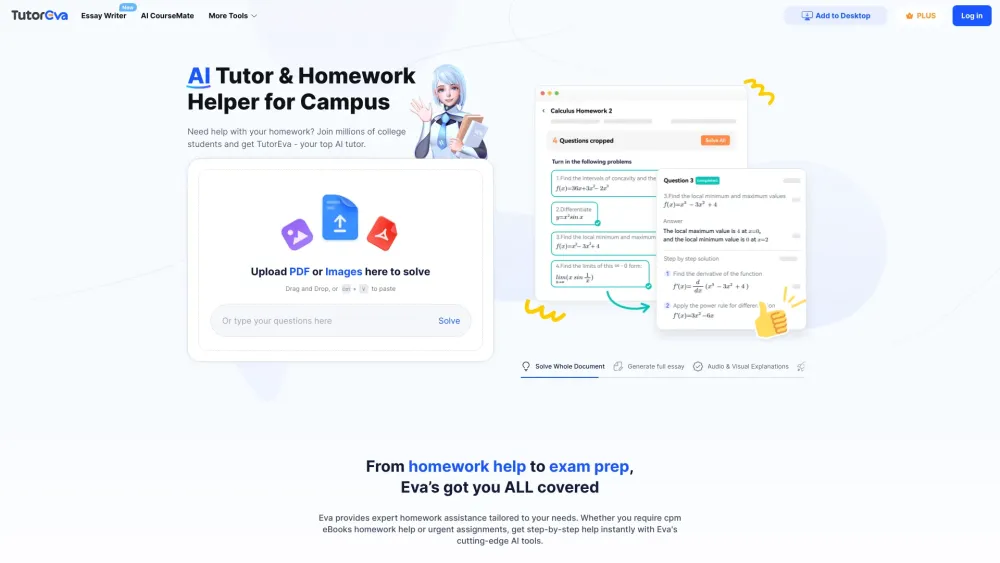

The two largest Llama 3.2 models (11B and 90B) now support image use cases: they can interpret charts and graphs, caption images, and identify objects from natural language prompts. For example, a user can ask which month their company had its best sales, and the model can reason out an answer from the available graphs. The larger models can also extract details from an image to write more detailed captions.

The lightweight models help developers build personalized apps that run in a private setting, such as apps that summarize recent messages or manage calendar invites for follow-up meetings.

Meta asserts that Llama 3.2 is competitive with Anthropic’s Claude 3 Haiku and OpenAI’s GPT-4o-mini in image recognition and other visual understanding tasks. The company also says it outperforms competitors such as Gemma and Phi 3.5-mini in instruction following, summarization, tool use, and prompt rewriting.

Llama 3.2 models are available for download on llama.com, Hugging Face, and across Meta’s partner platforms.

Expanded Business AI and Engaging Consumer Features

Meta is also expanding its business AI so that enterprises can use click-to-message ads on WhatsApp and Messenger and build agents that answer common questions, discuss product details, and complete purchases.

The company reports that more than 1 million advertisers use its generative AI tools and that 15 million ads were created with them in the past month. On average, ad campaigns that use Meta’s generative AI see an 11% higher click-through rate and a 7.6% higher conversion rate.

For consumers, Meta AI is gaining a “voice,” or rather several celebrity voices, including those of Dame Judi Dench, John Cena, Keegan-Michael Key, Kristen Bell, and Awkwafina.

“I believe voice will be a more natural way of interacting with AI than text,” Zuckerberg stated. “It’s just much better.”

Meta AI can respond to voice or text commands in the celebrity voices across WhatsApp, Messenger, Facebook, and Instagram. It will also respond to photos shared in chat and can edit them by adding or changing backgrounds. In addition, Meta is experimenting with new translation, video dubbing, and lip-syncing tools for Meta AI.

Zuckerberg reiterated that Meta AI is on track to become the most widely used assistant in the world, adding, “It’s probably already there.”