MIT Study Reveals Labeling Errors in Datasets Used for AI Testing


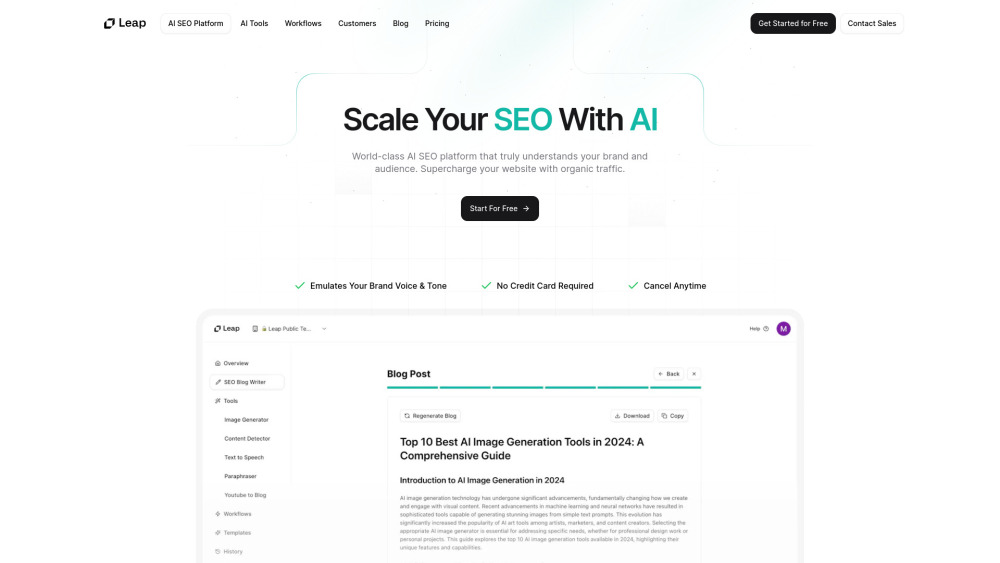

Unlock the potential of your online presence with our advanced AI SEO tool, designed specifically to help you produce high-quality SEO content. Enhance your website's visibility and engagement by leveraging cutting-edge algorithms that analyze trends and optimize your writing for search engines. Create compelling, relevant, and keyword-rich content that resonates with your audience while improving your ranking on search results. Embrace the future of content creation and watch your visibility soar!

In recent years, the emergence of advanced text-to-image models has revolutionized the field of artificial intelligence and creative content generation. These sophisticated systems leverage deep learning techniques to transform textual descriptions into stunning visual representations. By understanding the nuances of language and context, these models empower artists, marketers, and creators to dynamically bring their ideas to life. In this article, we delve into the mechanics, applications, and future potential of text-to-image technology, showcasing its impact on various industries and creative practices.

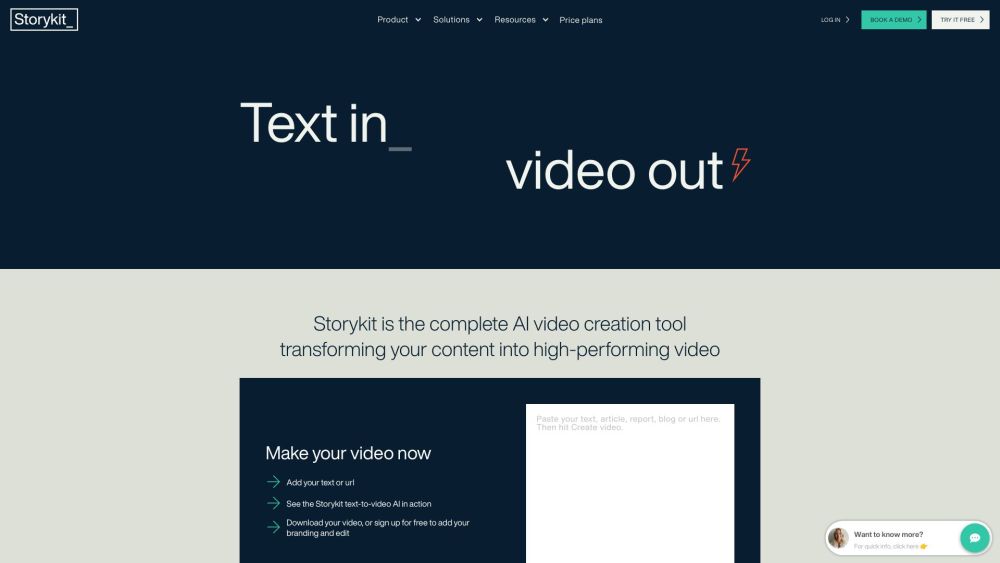

Elevate Your Content: Transforming it into High-Performing Video

In today's digital landscape, video content reigns supreme, driving engagement and boosting reach across platforms. By transforming your written material into compelling videos, you not only enhance audience interaction but also maximize your content's visibility. Let’s explore how to effectively convert your content into captivating, high-performing videos that resonate with viewers and elevate your brand presence.
