OpenAI and Anthropic Collaborate to Share AI Models with the U.S. AI Safety Institute for Enhanced Safety and Oversight



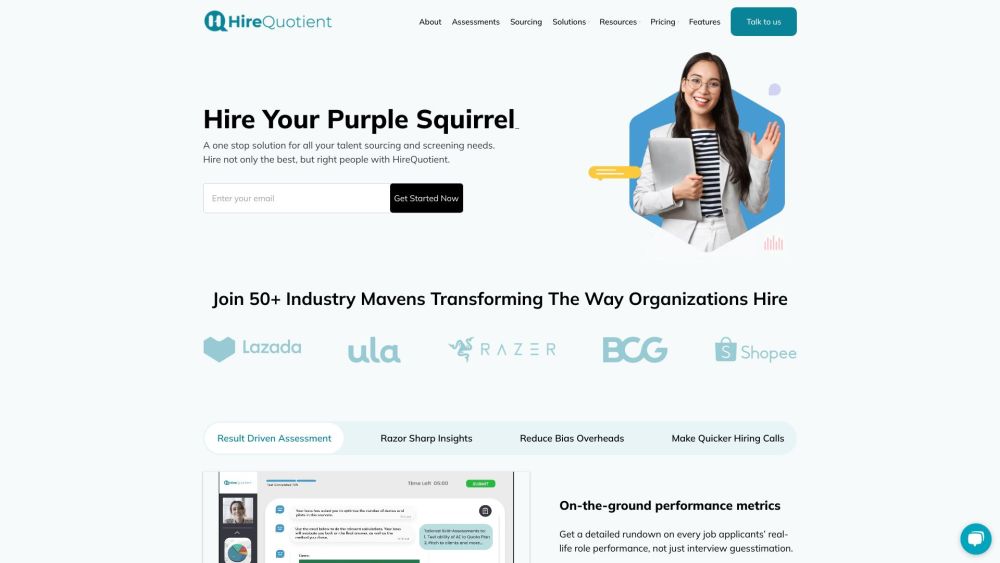

