OpenAI and Anthropic to Collaborate with US Government by Sharing Their AI Models

Most people like

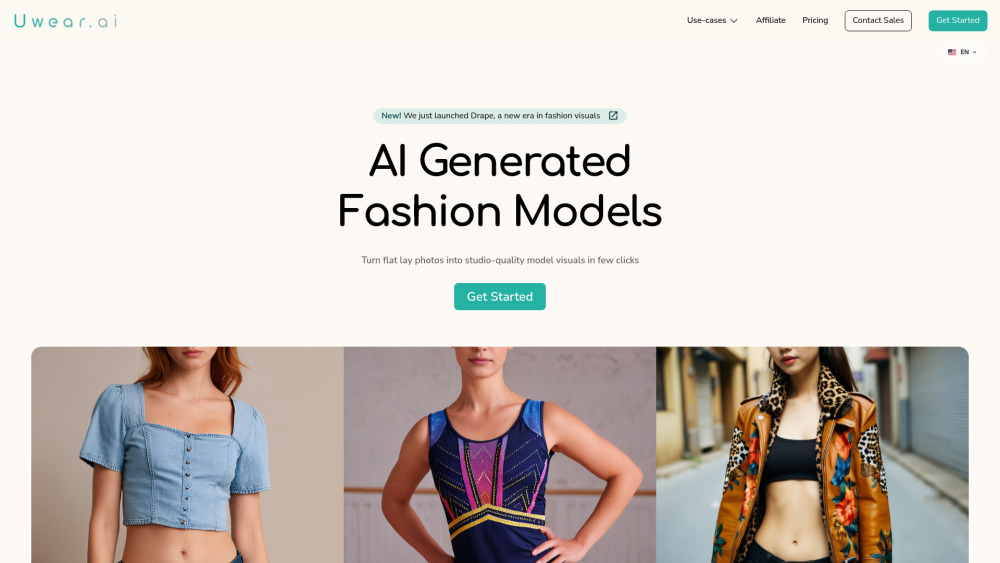
