OpenAI Creates Text Detection AI: Debate on Public Release

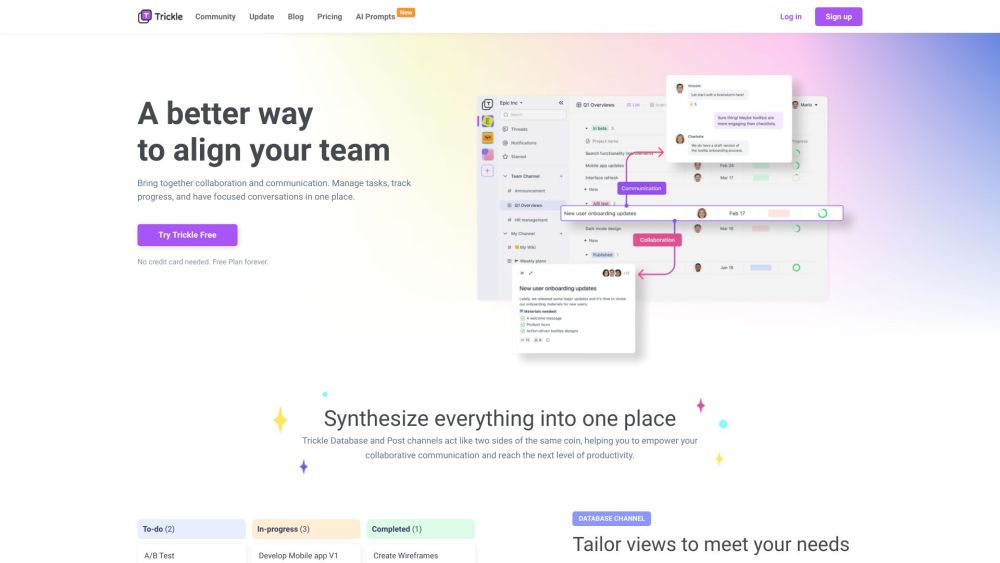
