OpenAI Faces Potential Halt on ChatGPT Releases Amid FTC Complaint

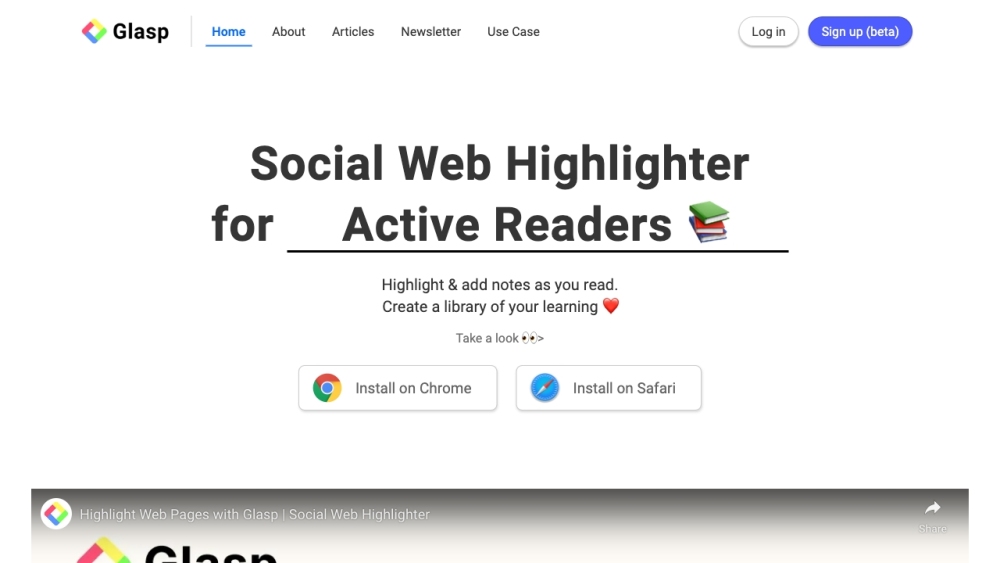

Most people like

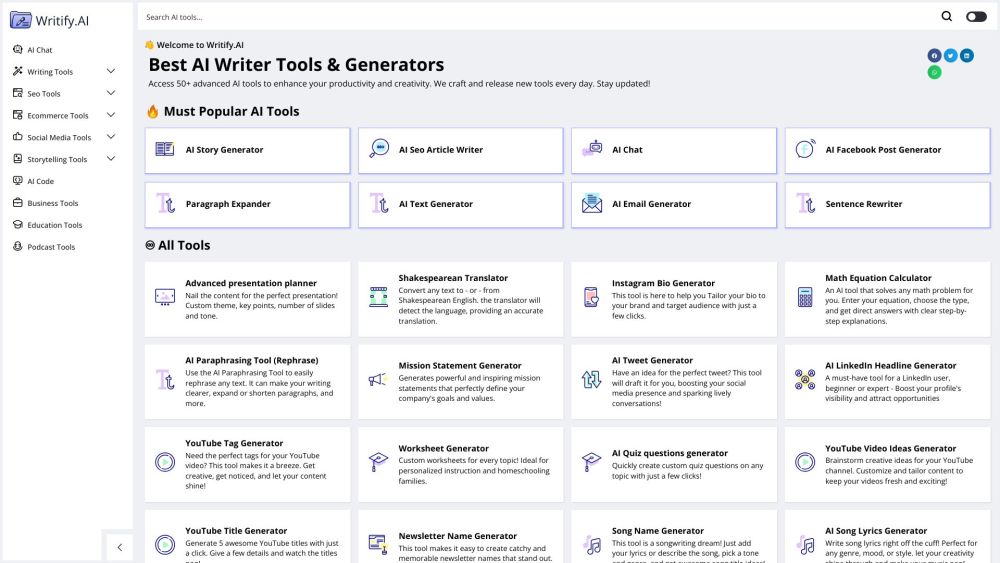

Unlock Your Potential with Our AI Tool Suite for Enhanced Productivity and Creativity

Discover how our powerful AI tool suite can transform your workflow, boost productivity, and ignite your creative spark. Tailored for individuals and teams alike, these innovative solutions streamline tasks, enhance collaboration, and inspire original ideas. Explore the future of work with cutting-edge AI technologies designed to maximize your efficiency and foster creativity in every project. Embrace a smarter way to achieve your goals today!

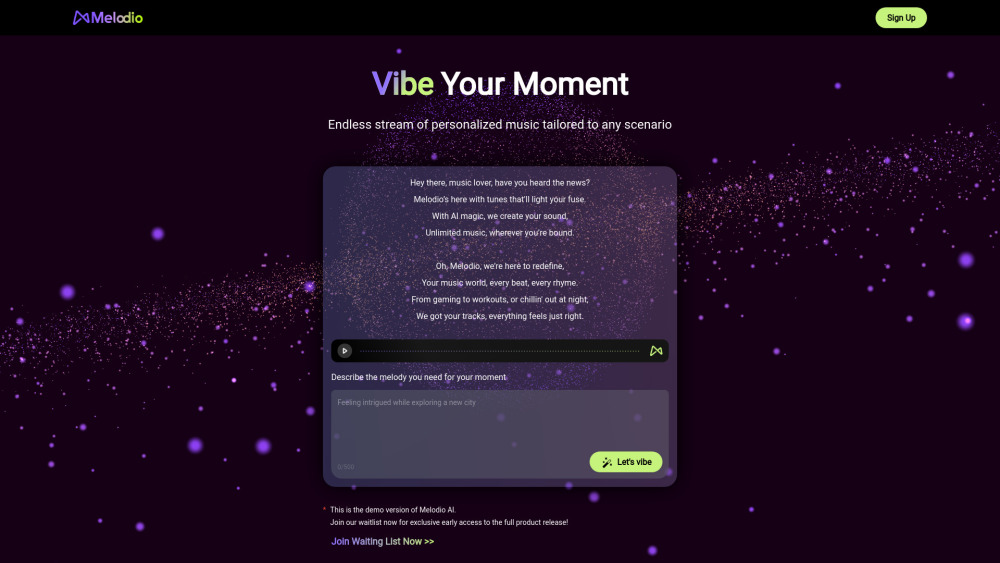

Discover the ultimate personalized AI music companion designed to enhance your listening experience. This innovative tool tailors music recommendations to your unique tastes, creating a custom soundtrack just for you. With advanced algorithms, it learns from your preferences and provides curated playlists that resonate with your mood, ensuring every note is perfectly suited to your vibe. Embrace the future of music with an AI companion that evolves alongside your musical journey, making every listening session uniquely yours.

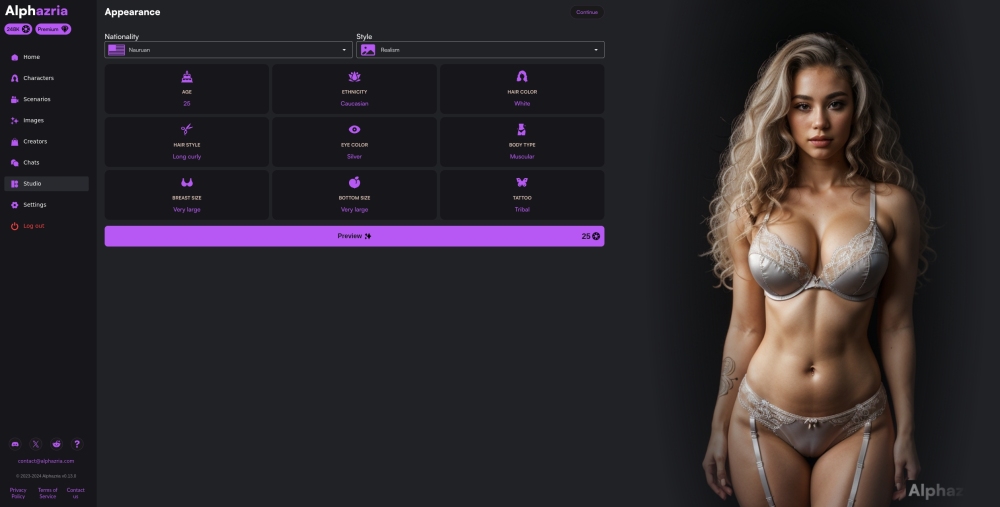

Explore the world of AI-generated adult content designed exclusively for mature audiences. Immerse yourself in innovative and engaging experiences tailored to adult preferences.

Find AI tools in YBX