OpenAI Is Training GPT-5: Three Major Upgrades to Anticipate for the Successor to GPT-4

Most people like

Discover the power of an AI image generator that creates stunning, high-quality images filled with intricate details. Unlock your creative potential with this advanced tool designed for artists, marketers, and content creators seeking visually striking imagery.

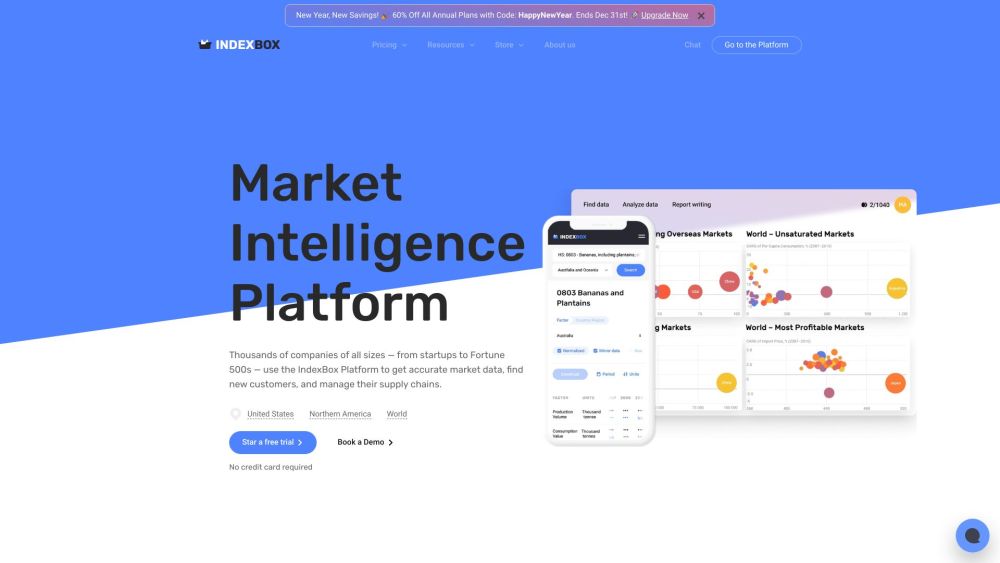

Introducing our AI-driven market intelligence platform, designed to empower businesses with actionable insights and data-driven strategies. This innovative tool harnesses advanced analytics and artificial intelligence to provide a comprehensive understanding of market trends, consumer behavior, and competitive landscapes. Whether you're a seasoned entrepreneur or a growing startup, our platform equips you with the necessary resources to make informed decisions and drive success in today's dynamic marketplace. Experience the future of business intelligence with our cutting-edge solution.

Create No-Code Chatbots Effortlessly

Are you looking to develop a chatbot but lack coding skills? Discover the power of no-code platforms that empower you to build sophisticated chatbots with ease. In this guide, we’ll walk you through the process of creating chatbots without writing a single line of code, making chatbot development accessible for everyone. Unlock the potential of automated conversations and enhance user engagement today!
