OpenAI Launches Training for Next-Gen Model — GPT-5 Expected in 90+ Days

Most people like

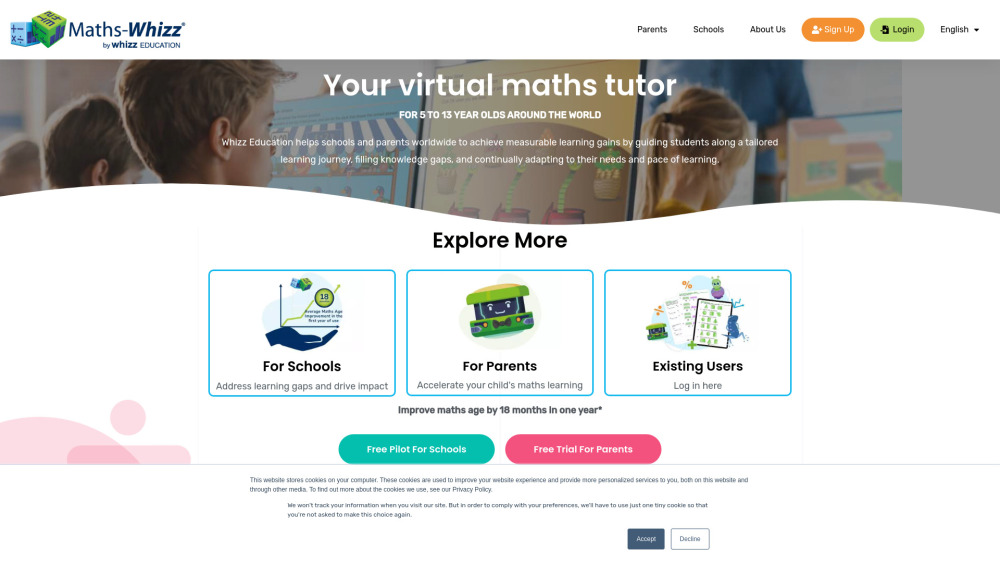

Introducing our AI-powered virtual math tutor designed specifically for children aged 5 to 13. This innovative tool provides personalized learning experiences, helping young learners grasp mathematical concepts in an engaging way. With interactive lessons, real-time feedback, and tailored practice exercises, our AI tutor nurtures confidence and fosters a love for mathematics. Whether your child needs help with basic arithmetic or more advanced topics, our virtual tutor adapts to their individual learning pace, making math enjoyable and accessible for every child.

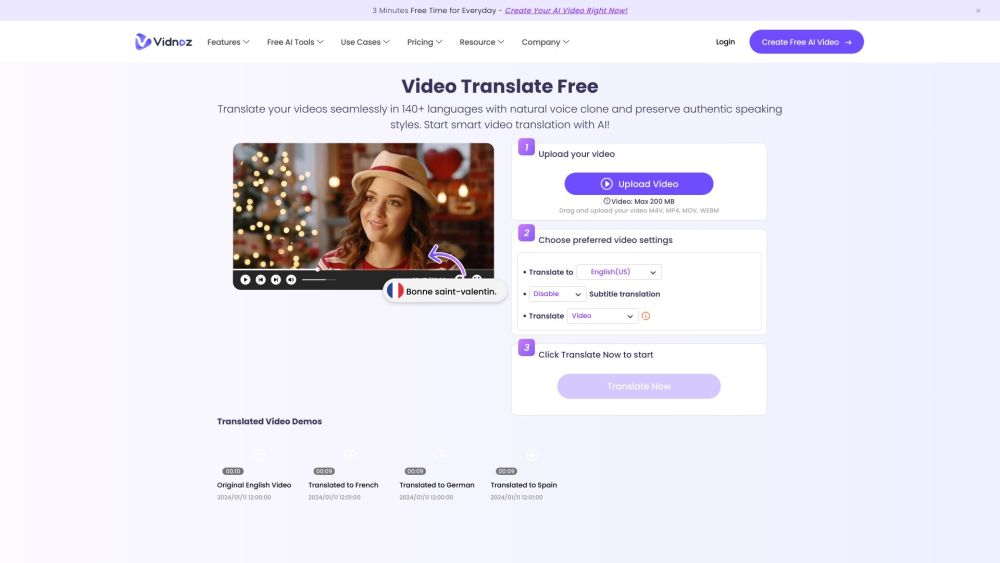

Translate Videos into 140+ Languages in Just 3 Simple Steps!

Unlock the global potential of your content with our easy-to-follow process for translating videos into over 140 languages. Whether you're aiming to reach a broader audience or enhance audience engagement, our streamlined approach ensures that your messages resonate with viewers around the world. Say goodbye to language barriers and welcome a more connected, multilingual audience!

Introducing an AI-Powered Platform for Enhanced Video Accessibility

In today’s digital world, ensuring video accessibility is crucial for engaging diverse audiences. Our innovative AI-driven platform transforms video content by automatically generating captions, transcripts, and translations, making it accessible to everyone, including individuals with hearing impairments and non-native language speakers. Join us in making video content universally accessible, unlocking the full potential of your media for all viewers.

Discover an AI-driven tool designed specifically for creating stunning anime-style artwork. This innovative platform harnesses advanced technology to bring your artistic visions to life, whether you're a seasoned creator or just starting. Enhance your anime art with ease and unleash your creativity like never before!
