OpenAI Claims Its New Model Achieves Human-Level Performance on 'General Intelligence' Test

Most people like

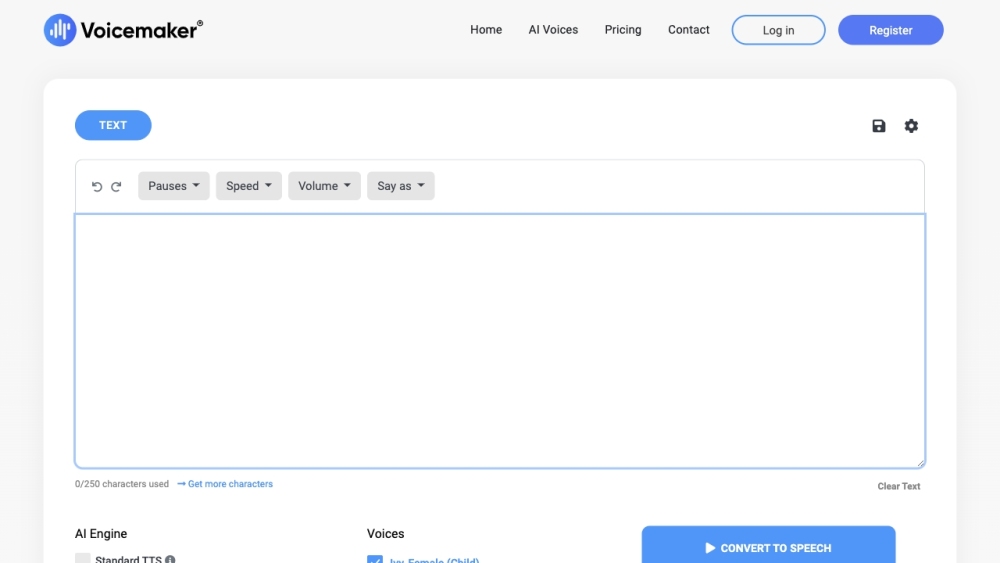

Voicemaker® transforms your text into lifelike audio with a diverse range of voice profiles and extensive customization features. Experience seamless text-to-speech technology that brings your content to life.

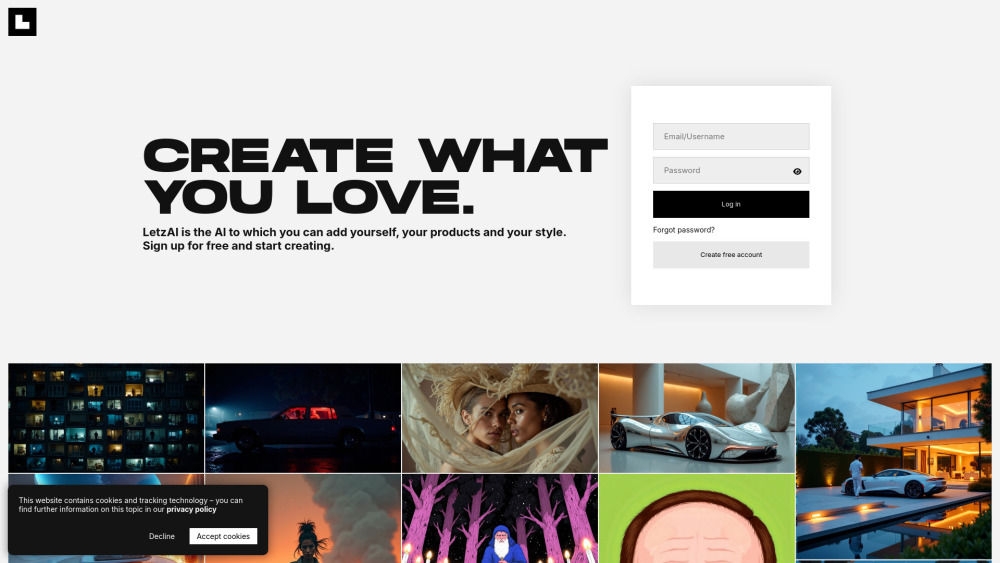

Discover a cutting-edge creative platform that harnesses the power of AI to generate stunning images tailored to your unique personal style. Unleash your imagination and transform ideas into visual masterpieces effortlessly.

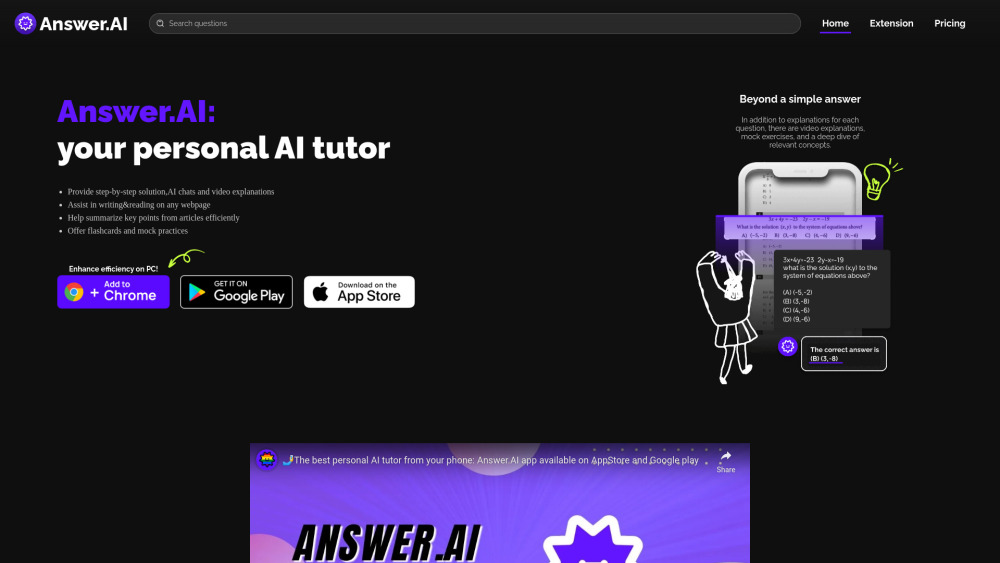

Unlock the potential of your academic journey with our innovative AI-powered homework helper app. Designed to assist students in mastering complex subjects, this tool offers personalized guidance and instant support for any homework challenges. Whether you're grappling with math problems or exploring literature, our app simplifies learning with tailored resources at your fingertips. Experience the future of education and enhance your study sessions today!

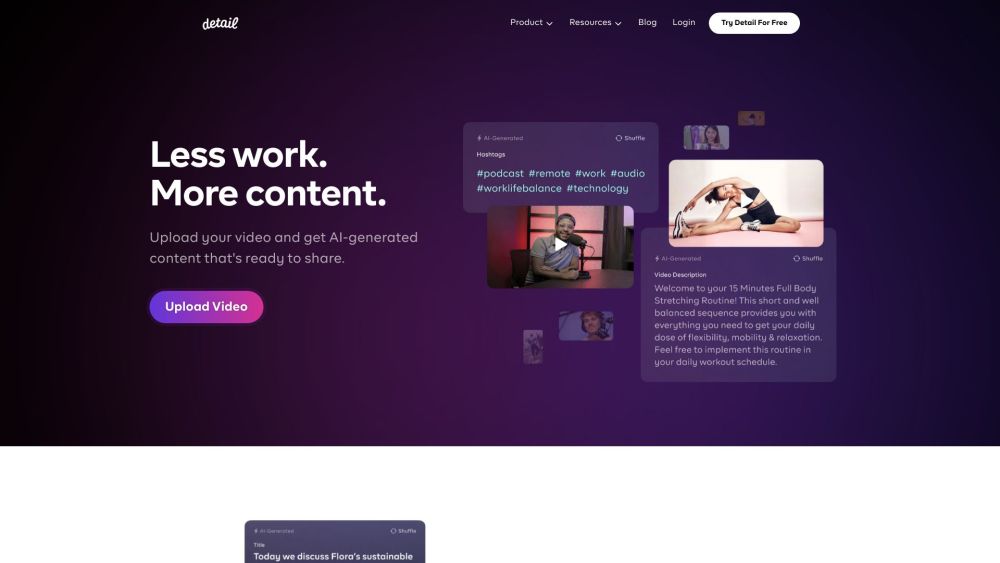
