Four advanced large language models (LLMs) were presented with an image depicting what appears to be a mauve-colored rock, but is actually a potentially serious tumor of the eye. The models were tasked with identifying its location, origin, and potential severity.

LLaVA-Med incorrectly places the malignant growth in the inner lining of the cheek, while LLaVA suggests it is in the breast. GPT-4V gives a vague response and fails to identify the tumor's location. In contrast, PathChat, a new pathology-specific LLM, correctly identifies the tumor as originating in the eye and notes that it can cause vision loss.

Developed in the Mahmood Lab at Brigham and Women’s Hospital, PathChat represents a significant advance in computational pathology. It acts as a consultant for human pathologists, helping them identify, assess, and diagnose tumors and other serious conditions.

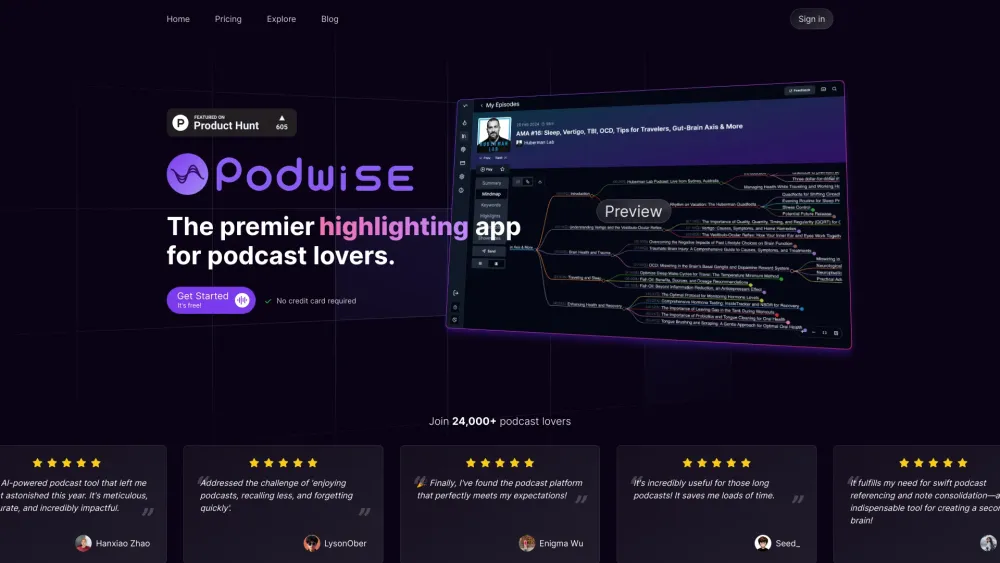

PathChat significantly outperforms leading models on multiple-choice diagnostic questions and provides clinically relevant responses to open-ended queries. It is now available through an exclusive license with Boston-based Modella AI.

“PathChat 2 is a multimodal large language model that comprehends pathology images and clinically relevant text, enabling it to engage in meaningful conversations with pathologists,” explained Richard Chen, Modella's founding CTO.

PathChat was compared against GPT-4V, LLaVA, and LLaVA-Med. To build it, researchers adapted a vision encoder for pathology, combined it with a pre-trained LLM, and fine-tuned the combined model with visual language prompts and question-answering sessions. The questions covered 54 diagnoses across 11 major pathology practices and organs.

The evaluation used two strategies: image-only, in which an image was paired with ten multiple-choice questions, and image plus clinical context, in which the image was accompanied by details such as patient sex, age, clinical history, and radiology findings.
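
The two strategies can be pictured as two prompts built from the same multiple-choice item. The sketch below is illustrative only: the `Case` fields, the prompt wording, the `build_prompt` helper, and all example values are assumptions made for this illustration, not the format used by the PathChat researchers.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Case:
    """One multiple-choice item about a single image (hypothetical schema)."""
    image_path: str
    question: str
    choices: List[str]
    clinical_context: Optional[str] = None  # e.g. sex, age, history, radiology findings


def build_prompt(case: Case, with_context: bool) -> str:
    """Return the text half of the prompt; the image is passed to the model separately."""
    lines = []
    if with_context and case.clinical_context:
        lines.append(f"Clinical context: {case.clinical_context}")
    lines.append(case.question)
    # Label the answer options A, B, C, ...
    for i, choice in enumerate(case.choices):
        lines.append(f"{chr(ord('A') + i)}. {choice}")
    lines.append("Answer with a single letter.")
    return "\n".join(lines)


# All values below are invented placeholders.
case = Case(
    image_path="slide_001.png",
    question="What is the most likely diagnosis?",
    choices=["Diagnosis one", "Diagnosis two", "Diagnosis three"],
    clinical_context="58-year-old female, abdominal pain",
)

image_only_prompt = build_prompt(case, with_context=False)
contextual_prompt = build_prompt(case, with_context=True)
```

Keeping the question and answer options identical across both prompts means any change in accuracy can be attributed to the added clinical context rather than to a change in wording.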

When analyzing images from X-rays, biopsies, and other medical tests, PathChat achieved 78% accuracy with image-only prompts and 89.5% accuracy when clinical context was added. The model excelled at summarizing, classifying, and captioning content, and accurately answered questions that require knowledge of pathology and biomedicine.

PathChat outperformed GPT-4V, open-source LLaVA, and LLaVA-Med in both evaluation settings. With image-only prompts, it scored over 52% better than LLaVA and over 63% better than LLaVA-Med. When provided clinical context, it performed 39% better than LLaVA and nearly 61% better than LLaVA-Med. Similarly, PathChat showed more than 53% improvement over GPT-4V with image-only prompts and 27% improvement with clinically contextualized prompts.
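
The figures above do not say whether the gaps are percentage points or relative improvements, and the two readings can differ substantially. The sketch below shows how multiple-choice accuracy and both kinds of gap might be computed. Only the 78% image-only accuracy comes from the reported results; the baseline value is a hypothetical number chosen purely for illustration, and the function names are assumptions.

```python
from typing import Sequence


def accuracy(predictions: Sequence[str], answers: Sequence[str]) -> float:
    """Fraction of multiple-choice questions answered correctly."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    if not answers:
        raise ValueError("need at least one question")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


def point_gap(model_acc: float, baseline_acc: float) -> float:
    """Difference between two accuracies, in percentage points."""
    return (model_acc - baseline_acc) * 100


def relative_gap(model_acc: float, baseline_acc: float) -> float:
    """Improvement relative to the baseline accuracy, in percent."""
    return (model_acc - baseline_acc) / baseline_acc * 100


pathchat_acc = 0.78  # image-only accuracy reported for PathChat
baseline_acc = 0.50  # HYPOTHETICAL baseline, not a reported result

print(f"{point_gap(pathchat_acc, baseline_acc):.1f} percentage points")
print(f"{relative_gap(pathchat_acc, baseline_acc):.1f}% relative improvement")
```

With this made-up baseline, the same pair of accuracies yields a 28-point gap but a 56% relative improvement, which is why it helps to know which measure a headline number refers to.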

Faisal Mahmood, an associate professor of pathology at Harvard Medical School, pointed out that prior AI models in pathology were often disease-specific or focused on singular tasks, lacking adaptability for interactive use by pathologists.

“PathChat represents a step towards general pathology intelligence, serving as an AI copilot that can assist researchers and pathologists across diverse situations,” Mahmood commented.

For instance, in an image-only multiple-choice scenario, PathChat successfully identified a lung adenocarcinoma from a chest X-ray for a 63-year-old male with chronic cough and unexplained weight loss. In another instance with clinical context, it correctly identified a liver tumor as a metastasis, providing insights about possible connections to melanoma.

The model's ability to handle downstream tasks, such as differential diagnosis and tumor grading, despite not being specifically trained on labeled examples for these tasks, marks a significant shift in pathology AI development. Traditionally, model training for these tasks required a vast number of labeled examples.

PathChat could facilitate AI-assisted human-in-the-loop diagnoses, where initial assessments are refined with further context. For complex cases, such as cancers of unknown primary origin, or in low-resource settings with limited access to expert pathologists, this approach could prove invaluable.

In research, PathChat could summarize features from extensive image datasets and automate the quantification and interpretation of crucial morphological markers.

“The potential applications for an interactive, multimodal AI copilot in pathology are vast,” the researchers noted. “LLMs and generative AI are set to revolutionize computational pathology with a focus on natural language and human interaction.”

While PathChat shows promise, the researchers acknowledge challenges such as hallucinations, which could be mitigated through reinforcement learning from human feedback (RLHF). The model also needs ongoing training to stay current with medical knowledge and terminology, and retrieval-augmented generation (RAG) could help by letting it draw on an up-to-date knowledge base at query time.
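
As a rough illustration of the RAG idea, the sketch below retrieves the most relevant note from a small collection and prepends it to the question before it would be sent to a model. This is not how PathChat works or is planned to work: the keyword-overlap scoring is a toy stand-in for a real embedding-based search, and the function names and placeholder notes are assumptions.

```python
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase, split on whitespace, and strip basic punctuation."""
    return {word.strip(".,;:()?").lower() for word in text.split()} - {""}


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Rank documents by how many words they share with the query (toy scoring)."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def augment(query: str, documents: List[str], k: int = 1) -> str:
    """Build a prompt that places the retrieved passages ahead of the question."""
    passages = retrieve(query, documents, k)
    context = "\n".join(f"- {p}" for p in passages)
    return f"Reference notes:\n{context}\n\nQuestion: {query}"


# Placeholder notes; a real system would index curated, current medical sources.
notes = [
    "Placeholder note about grading criteria for tumor type A.",
    "Placeholder note about staining patterns for tumor type B.",
]
prompt = augment("Which staining patterns suggest tumor type B?", notes)
```

Because the reference notes live outside the model, they can be updated without retraining, which is the property that makes retrieval attractive for fast-moving medical knowledge.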

Further enhancements could include integrations with digital slide viewers and electronic health records, making PathChat even more beneficial for pathologists and researchers. Mahmood also suggested that the technology could extend to other medical imaging fields and data types, such as genomics and proteomics.

The research team plans to gather extensive human feedback to align the model’s performance with user expectations and improve its responses. They will also connect PathChat with clinical databases to enable it to retrieve relevant patient information for better-informed analysis.

“Our goal is to collaborate with expert pathologists across various specialties to develop evaluation benchmarks and comprehensively assess PathChat’s capabilities across diverse disease models and workflows,” Mahmood stated.