Researchers Reveal Current AI Watermarks Are Easily Removable

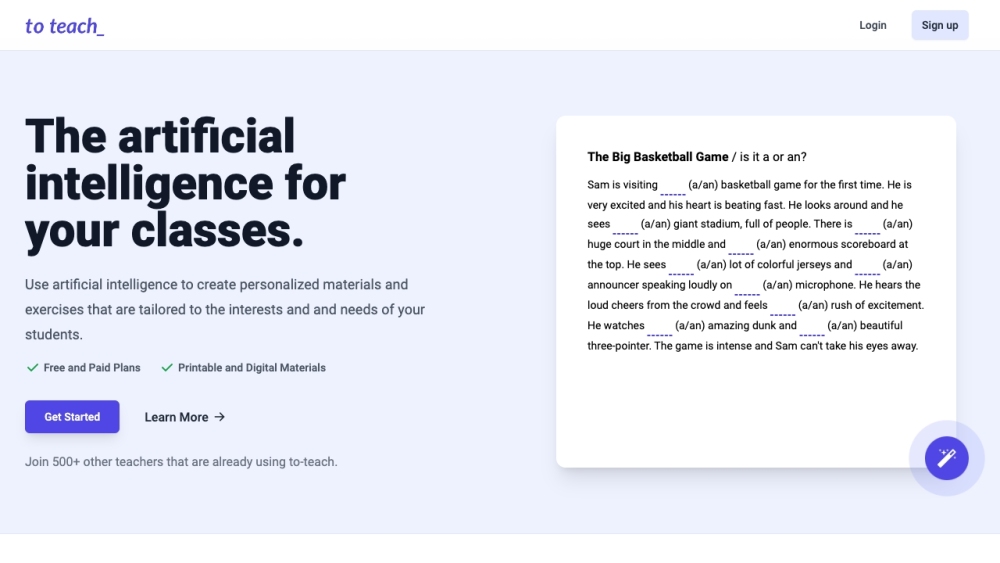

Transform your learning journey with personalized experiences in just seconds. Discover how tailored educational approaches can enhance your skills and understanding effortlessly.

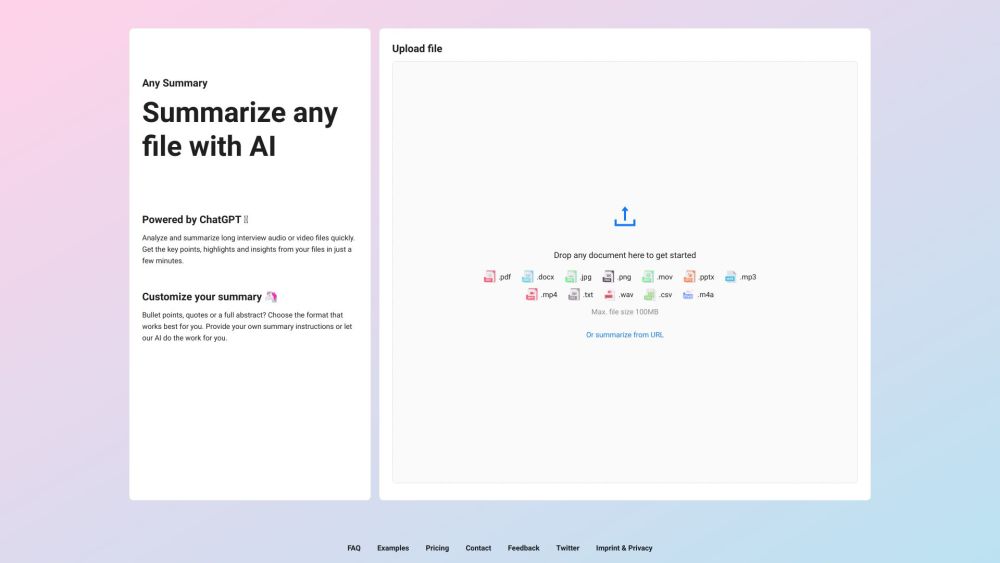

Introducing AnySummary: an innovative AI-driven tool designed to effortlessly summarize text, audio, and video content. With cutting-edge technology, AnySummary streamlines your information consumption, making it easier than ever to grasp key insights from various media formats.

HireQuotient is an innovative platform designed specifically for non-tech hiring that streamlines and automates the recruitment process from start to finish.

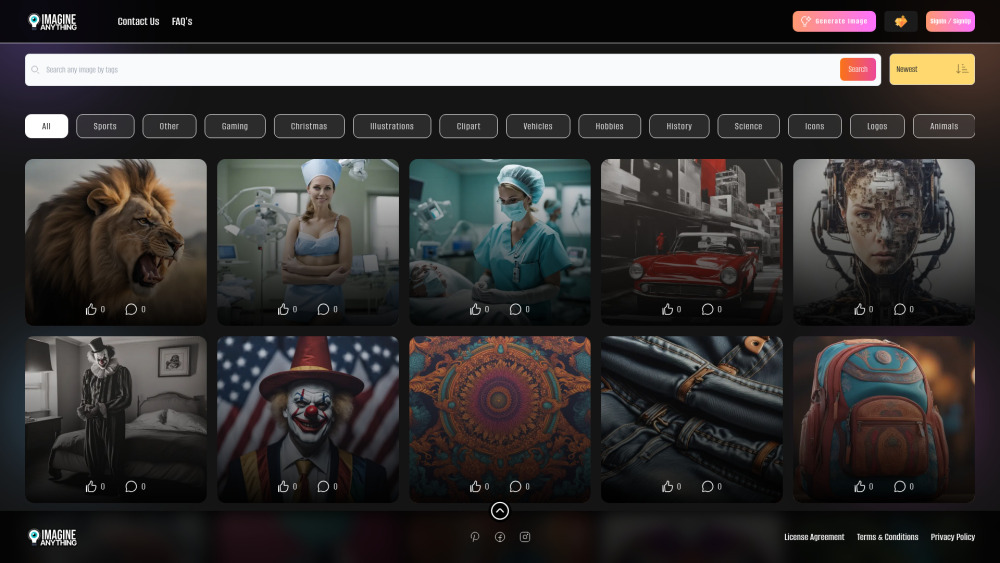

Discover the power of our free AI image generator, designed to help you effortlessly transform your ideas into stunning visuals. Whether you’re a content creator, marketer, or hobbyist, our tool provides a simple and intuitive way to generate unique images using advanced artificial intelligence. Start creating eye-catching graphics today and bring your visions to life!

Find AI tools in YBX
