Samsung Advises Employees Against Using AI Tools Such as ChatGPT and Google Bard for Work Purposes

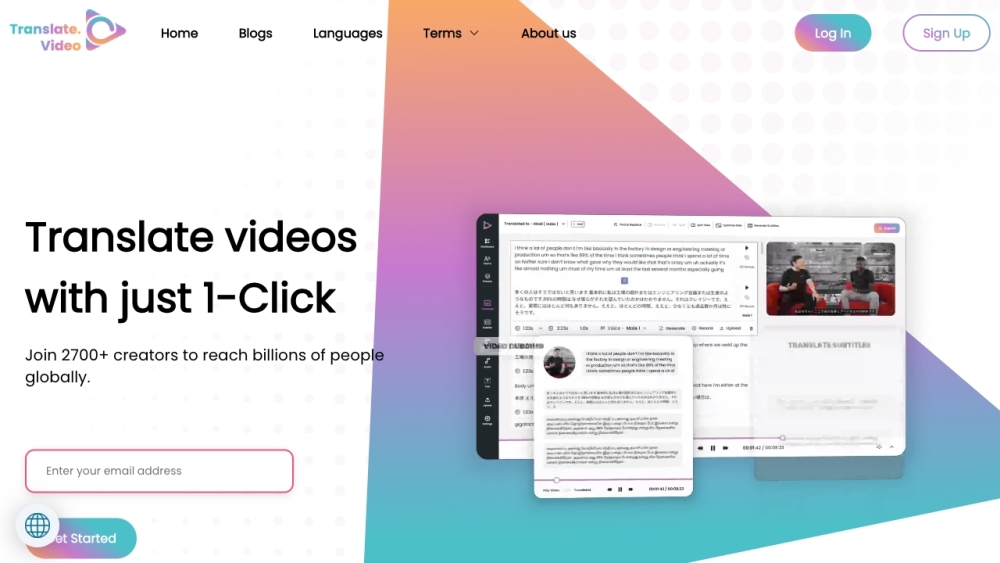

Most people like

Unlock reliable company insights with our AI-driven knowledge base. Discover accurate and trustworthy information to make informed business decisions.

NaturalReader transforms written text into spoken audio, allowing users to easily listen to their documents. This innovative tool enhances accessibility and promotes multitasking, making it a valuable resource for both students and professionals alike.

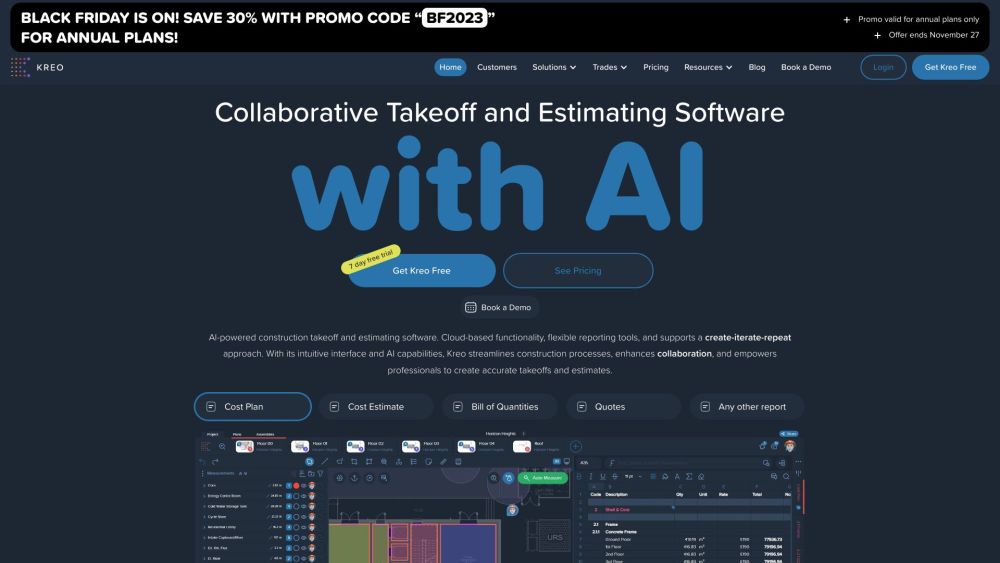

Transform your construction projects with AI-driven takeoff and estimating software to enhance efficiency and accuracy. Streamline your processes and maximize productivity today!
