The Rise of Small Language Models: Arcee AI's Innovative Approach

Momentum behind small language models (SLMs) is building, as Arcee AI's $24 million Series A shows: the round comes just six months after the company raised $5.5 million in seed funding in January 2024. Arcee has also launched Arcee Cloud, a hosted SaaS version of its platform that complements its existing Arcee Enterprise deployment option.

Led by Emergence Capital, this funding round reflects increasing investor confidence in the efficiency of smaller AI models. “The Series A provides resources to expand our solution to the masses through our cloud platform,” said Mark McQuade, Co-Founder and CEO of Arcee AI, in an exclusive media interview.

Exploring the Potential of Small Language Models

Small language models are rapidly emerging as a preferred solution for enterprises, particularly for specialized question-answering applications. McQuade emphasizes, “For an HR-specific model, knowledge of the 1967 Academy Awards is irrelevant. We’ve seen remarkable success with models as small as 7 billion parameters.”

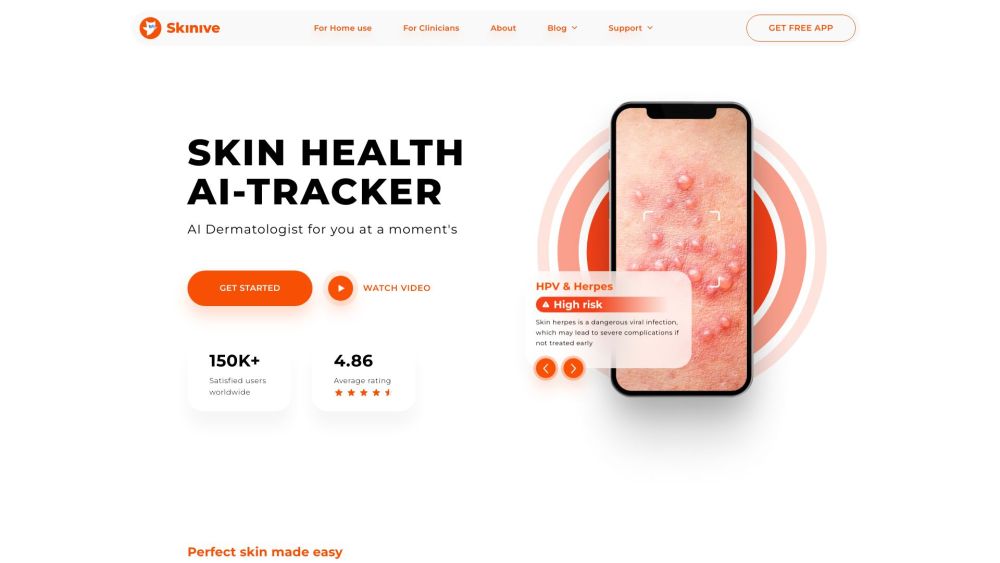

Arcee AI's applications range from tax assistance and educational support to HR inquiries and medical consultations. Rather than simply extracting data, these applications focus on delivering accurate, context-aware answers, which makes SLMs valuable in industries such as finance and healthcare.

Challenging the Norm: The Benefits of SLMs

As discussed in our April coverage, SLMs are challenging the "bigger is always better" mentality in AI. McQuade notes that Arcee can train models comparable to GPT-3 for as little as $20,000. "For business needs, larger models are unnecessary," he explains. SLMs deploy faster, are easier to customize, and run on less powerful hardware, while also reducing security risks and hallucinations in specialized tasks.

Tech giants like Microsoft and Google are also advancing SLM technology, challenging the idea that massive scale is a prerequisite for effective AI. Microsoft's Phi series includes models ranging from 2.7 billion to 14 billion parameters, while Google's Gemma series offers variants that run on standard consumer hardware, making advanced AI capabilities more accessible.

Arcee AI's Unique Edge in the Market

While Microsoft and Google focus on general-purpose models, Arcee AI specializes in domain-specific models tailored for enterprises. “We empower organizations to expand beyond a single high ROI use case,” McQuade says. “With our efficient models, they can tackle multiple applications—10 or even 20.”

This strategy meets the rising demand for cost-effective, energy-efficient AI solutions. Arcee's Model Merging and Spectrum technologies aim to deliver these advantages while allowing greater customization than off-the-shelf models.

Innovative Techniques: Model Merging and Spectrum

Arcee's approach includes Model Merging, a technique that combines multiple AI models into a single, more capable model without increasing the size of the result. "We view model merging as the next iteration of transfer learning," explains McQuade. For instance, merging two 7-billion-parameter models yields one hybrid model that still has 7 billion parameters, adding capabilities while preserving efficiency.
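To see why merging does not change model size, here is a minimal sketch of the simplest merge method, linear (weighted) averaging of two models that share an identical architecture. This is an illustrative assumption, not necessarily the method Arcee uses: each "model" is a hypothetical dict mapping tensor names to flat weight lists, and `linear_merge` and `param_count` are hypothetical helpers.

```python
from typing import Dict, List

# Hypothetical stand-in for a checkpoint: tensor name -> flat list of weights.
Weights = Dict[str, List[float]]


def linear_merge(a: Weights, b: Weights, alpha: float = 0.5) -> Weights:
    """Return alpha * a + (1 - alpha) * b, computed tensor by tensor."""
    if a.keys() != b.keys():
        raise ValueError("models must share the same architecture")
    merged: Weights = {}
    for name in a:
        if len(a[name]) != len(b[name]):
            raise ValueError(f"shape mismatch in tensor {name!r}")
        # Element-wise weighted average: output tensor has the input's shape.
        merged[name] = [alpha * x + (1 - alpha) * y for x, y in zip(a[name], b[name])]
    return merged


def param_count(model: Weights) -> int:
    """Total number of weights across all tensors."""
    return sum(len(t) for t in model.values())


if __name__ == "__main__":
    # Two toy "specialist" models with the same (hypothetical) architecture.
    hr_model = {"embed": [0.2, 0.4], "mlp": [1.0, -1.0, 0.5]}
    tax_model = {"embed": [0.6, 0.0], "mlp": [0.0, 1.0, 0.5]}
    merged = linear_merge(hr_model, tax_model)
    print(param_count(hr_model), param_count(tax_model), param_count(merged))  # → 5 5 5
```

Because every merged tensor is computed element-wise from two tensors of the same shape, the result has exactly as many parameters as each input, which is why two 7B models merge into a 7B model. More sophisticated merge methods change how the weights are combined, not this size property.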

Meanwhile, Arcee's Spectrum technology improves training efficiency by updating only the model layers with the highest signal-to-noise ratio (SNR) and freezing the rest. "Spectrum optimizes training time by up to 42% while preventing catastrophic forgetting," says Lucas Atkins, Research Engineer at Arcee AI. Training selectively in this way reduces resource requirements, making it easier for businesses to build custom AI models.
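The layer-selection idea behind Spectrum can be sketched roughly as follows. This is a simplified illustration, not Arcee's implementation: it assumes a layer's SNR is the ratio of singular values above to those below a random-matrix noise threshold (the Marchenko-Pastur bulk edge for an i.i.d. noise matrix with entry standard deviation `sigma`), and it takes precomputed singular values as input instead of running an SVD. The functions `mp_upper_edge`, `layer_snr`, and `select_layers`, along with the toy layer names and values, are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def mp_upper_edge(rows: int, cols: int, sigma: float) -> float:
    """Approximate largest singular value of a pure-noise rows x cols matrix
    with i.i.d. entries of standard deviation `sigma` (Marchenko-Pastur edge)."""
    return sigma * (math.sqrt(rows) + math.sqrt(cols))


def layer_snr(singular_values: Sequence[float], rows: int, cols: int, sigma: float) -> float:
    """Assumed SNR: sum of singular values above the noise edge divided by
    the sum of those at or below it (infinite if there is no noise part)."""
    edge = mp_upper_edge(rows, cols, sigma)
    signal = sum(s for s in singular_values if s > edge)
    noise = sum(s for s in singular_values if s <= edge)
    return signal / noise if noise > 0 else math.inf


def select_layers(snr_by_layer: Dict[str, float], fraction: float) -> List[str]:
    """Names of the top `fraction` of layers ranked by SNR (always at least one)."""
    k = max(1, math.ceil(len(snr_by_layer) * fraction))
    ranked = sorted(snr_by_layer, key=lambda name: snr_by_layer[name], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical singular values for three 4x4 layers; with sigma = 1.0
    # the noise edge is 1.0 * (2 + 2) = 4.0.
    toy_layers = {
        "layer.0": [9.0, 3.0, 2.0, 1.0],
        "layer.1": [5.0, 3.5, 3.0, 2.5],
        "layer.2": [3.9, 3.0, 2.0, 1.0],
    }
    snrs = {name: layer_snr(sv, 4, 4, 1.0) for name, sv in toy_layers.items()}
    trainable = select_layers(snrs, fraction=0.5)
    frozen = [name for name in toy_layers if name not in trainable]
    print("train:", trainable)  # → train: ['layer.0', 'layer.1']
    print("freeze:", frozen)    # → freeze: ['layer.2']
```

In an actual fine-tuning run, the selected layers would stay trainable and every other parameter would be frozen; the `fraction` setting trades training cost against how much of the model is allowed to adapt.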

Expanding Access through Innovative Pricing Models

"We offer annual contracts, a unique approach in this space," McQuade states. This marks a departure from the usage-based pricing common in AI services: pricing is based on software licensing, which gives customers flexibility as they scale.

Arcee AI offers two primary product lines:

1. Model Training Tools: Enable customers to develop their own models.

2. Pre-Trained Models: Available for customers using Arcee software.

Their delivery methods include:

- Arcee Cloud: A SaaS solution for training and merging models.

- Arcee Enterprise: For deployment within customer Virtual Private Clouds (VPC).

The annual contract model is designed to grow with customers, letting them add new models as their needs evolve. Currently generating $2 million in revenue, Arcee AI aims to become profitable by early 2025 and plans to double its employee count within 18 months.

Empowering Agile AI Development

The true strength of SLMs lies not only in their domain focus but also in their ability to let businesses experiment, learn, and optimize continuously. Rapid iteration and lower-cost model development may soon define successful AI adoption.

This evolving landscape may lead organizations to view AI models as dynamic systems that adapt and evolve, mirroring the agile methodologies now standard in software development. Companies using SLMs can adjust quickly to user needs while exploring multiple applications without significant financial risk.

If Arcee delivers efficient, customizable SLMs, it could capitalize on the current shift toward agility in AI development and establish itself as a formidable player in the industry. The coming months will show whether small language models can truly reshape AI development strategies.