Synthesia Advances AI Video Technology with New 'Expressive Avatars' for Enhanced Engagement

Most people like

Introduction to AI Agents for Web Data Extraction

In the era of big data, extracting valuable information from the web has become essential for businesses and researchers alike. AI agents are revolutionizing this process by automating web data extraction, enabling users to gather insights efficiently and accurately. By harnessing advanced algorithms and machine learning techniques, these intelligent agents streamline the task of sifting through vast amounts of online information, transforming raw data into actionable intelligence. Explore how AI agents are changing the landscape of web data extraction and the numerous benefits they offer to organizations in today’s digital world.

Unlock the power of LinkedIn email extraction and harness tailored outreach strategies using ChatGPT. Elevate your networking game and connect with your target audience effectively!

Upscalepics is a free online tool designed to enhance and manipulate images with ease and precision. Perfect for anyone looking to improve image quality, this user-friendly platform offers powerful features for stunning visual transformations.

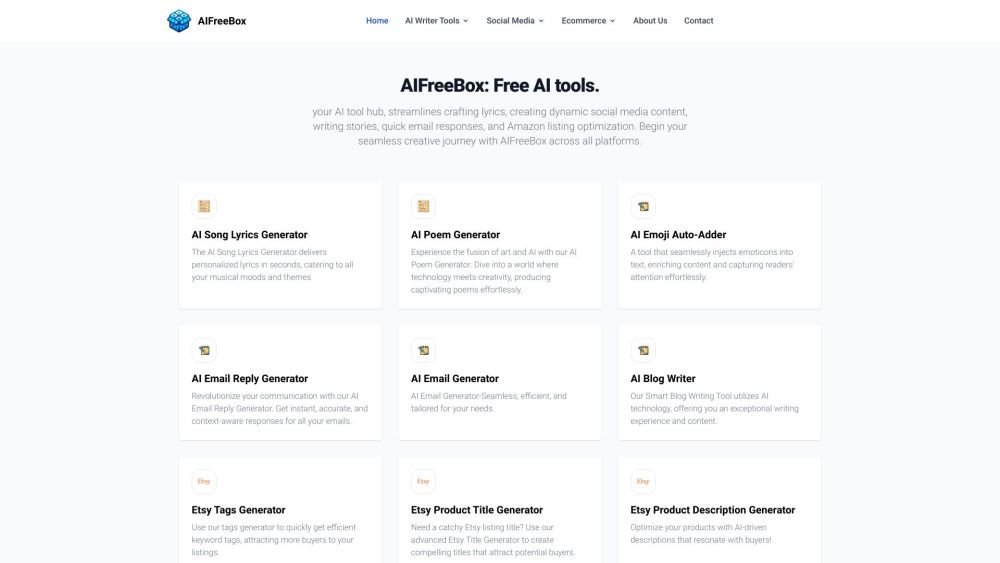

Discover the ultimate AI tool hub designed specifically for creative endeavors. Explore a diverse range of innovative tools that empower you to enhance your artistic projects, streamline your workflow, and unlock your creative potential. Whether you’re a designer, writer, or content creator, our platform offers everything you need to elevate your work and inspire your imagination.

Find AI tools in YBX

Related Articles

Refresh Articles