Together AI Unveils Enterprise AI Platform for Private Cloud: Achieve Faster Inference and Lower Costs

Most people like

Introducing CodeDesign.ai, an innovative AI website builder designed to effortlessly create visually appealing and functional websites. Whether you're a beginner or a seasoned developer, our platform streamlines the website creation process, allowing you to focus on what truly matters—your content. Elevate your online presence with CodeDesign.ai today!

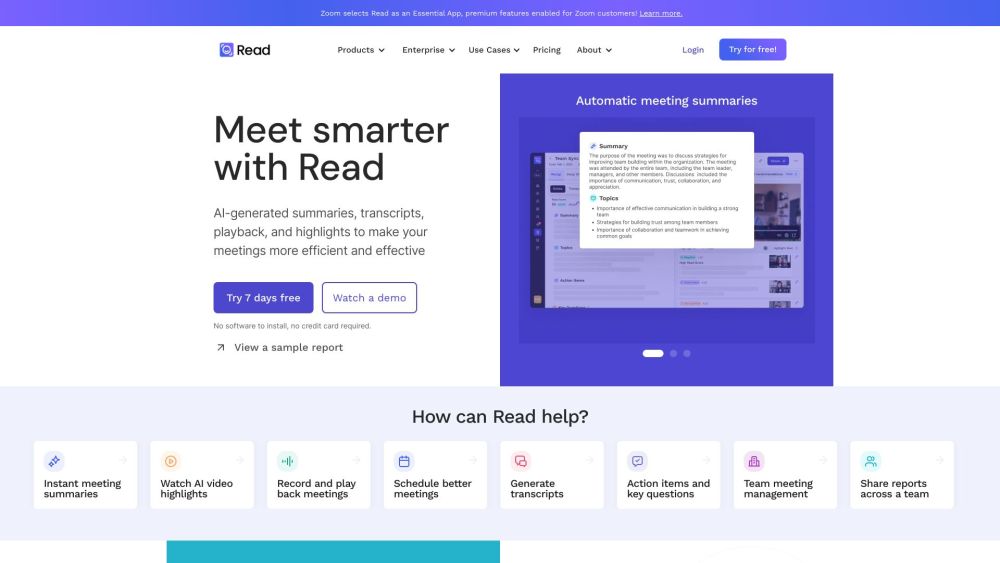

Introducing Read: Your Partner in Enhancing Meeting Wellness through Smart Scheduling, In-Depth Analytics, Concise Summaries, and Tailored Recommendations! Experience a transformative approach to meetings that prioritizes productivity and well-being.

Discover the power of Ethical Data Labeling Outsourcing for AI Models. In today's competitive landscape, accurate and ethical data labeling is crucial for developing advanced artificial intelligence solutions. Companies are increasingly turning to outsourcing for efficient and reliable labeling services that adhere to ethical standards. This introduction provides insights into the benefits of such outsourcing and its impact on AI model performance and compliance.

Discover the ultimate personalized AI music companion designed to enhance your listening experience. This innovative tool tailors music recommendations to your unique tastes, creating a custom soundtrack just for you. With advanced algorithms, it learns from your preferences and provides curated playlists that resonate with your mood, ensuring every note is perfectly suited to your vibe. Embrace the future of music with an AI companion that evolves alongside your musical journey, making every listening session uniquely yours.
