Top AI Companies Commit to Ensuring Children's Online Safety Worldwide

Most people like

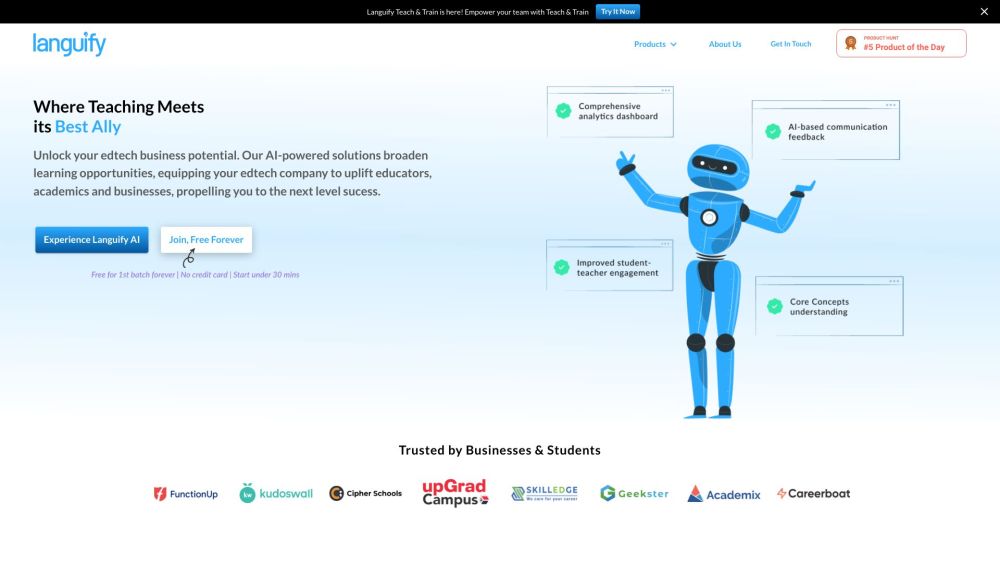

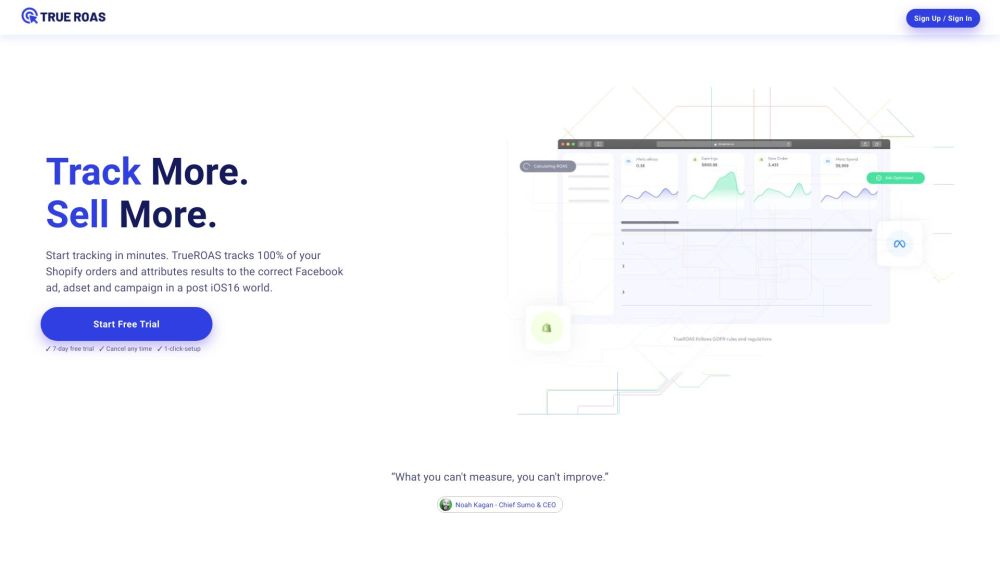
