Uber AI Demonstrates 'Superhuman' Skill in Every Atari 2600 Game

Most people like

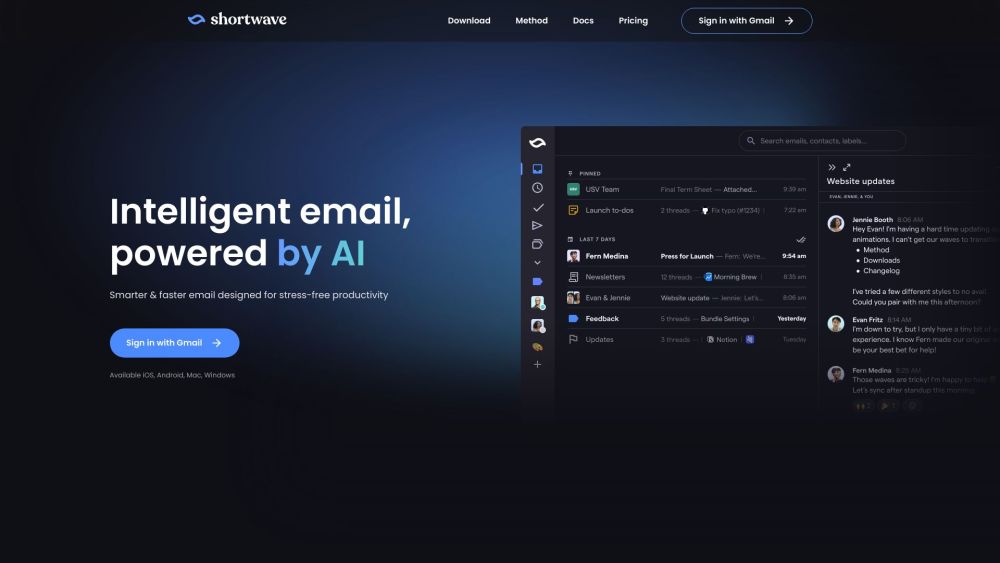

