xAI Opens Up Grok's Base Model to the Public—Training Code Not Included

Most people like

Introducing your AI-powered virtual health assistant, tailored to provide personalized recommendations and support. This innovative tool combines advanced artificial intelligence with a wealth of health expertise, ensuring you receive accurate advice and guidance for your well-being. Discover how our virtual health assistant can empower your health journey today!

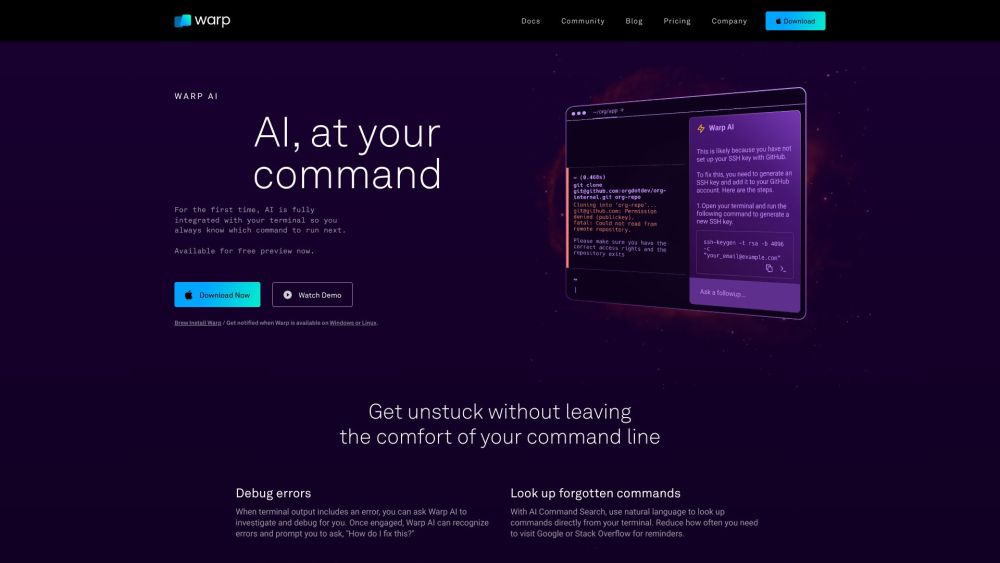

The innovative terminal enhanced with AI technology to supercharge your software development experience.

Unlock seamless access to over 100 AI models through a single API, enabling continuous innovation 24/7.
