Xbox's Latest Transparency Report Reveals Insights on AI Implementation for Enhanced Player Safety

Most people like

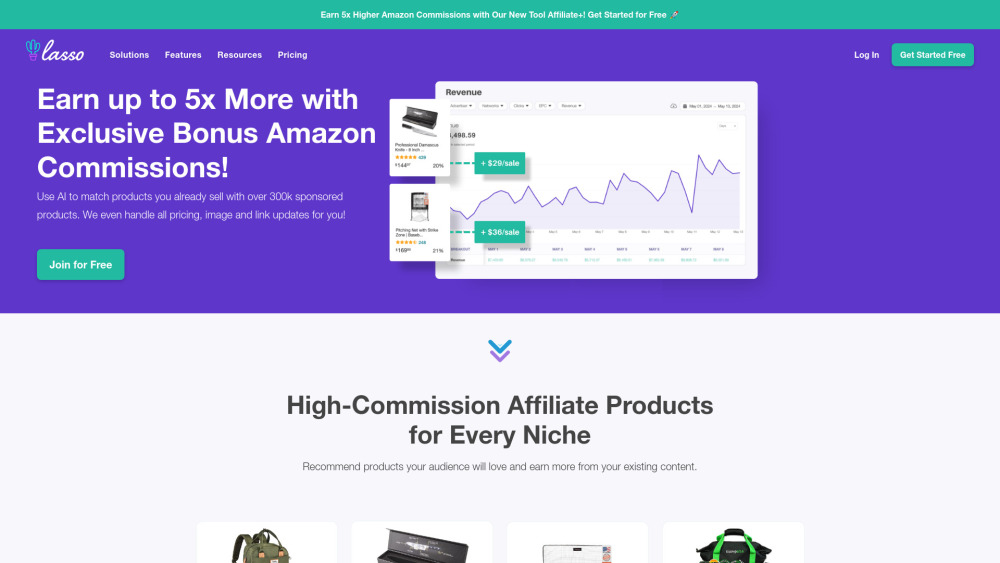

Welcome to our AI-driven affiliate marketing platform, where innovation meets opportunity. Our cutting-edge technology empowers businesses and marketers to optimize their affiliate strategies, driving sales and boosting engagement. By harnessing the power of artificial intelligence, we analyze data in real-time, enabling targeted campaigns that convert. Whether you're a seasoned marketer or new to affiliate marketing, our platform offers the tools and insights you need to maximize your success in the digital marketplace. Discover how our AI-driven solutions can transform your affiliate marketing efforts today!

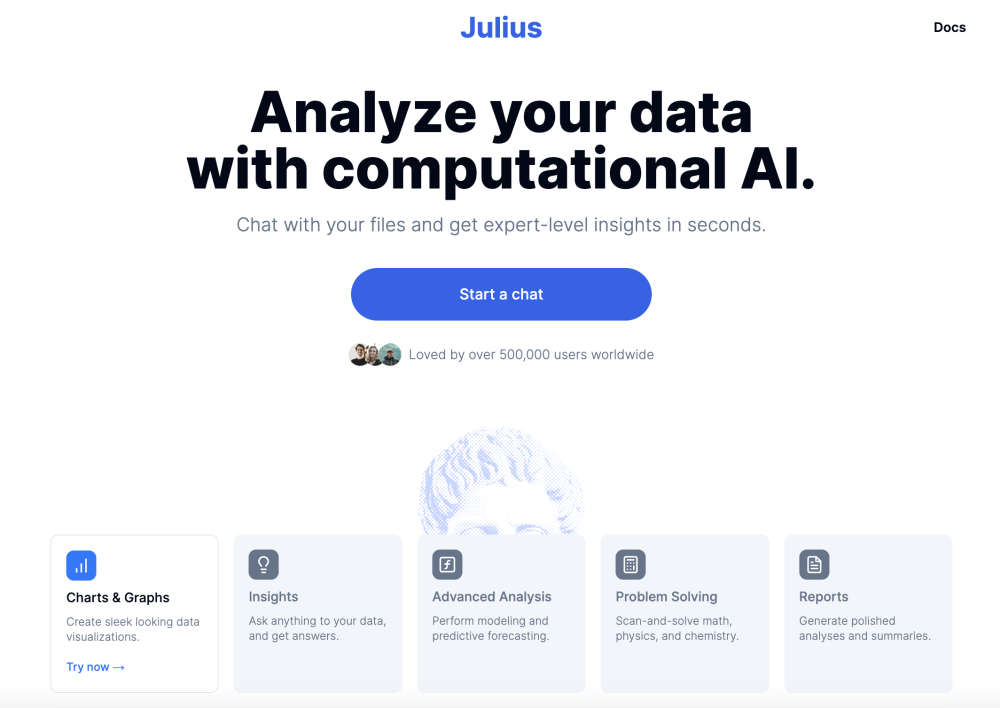

Unlock the power of AI-driven data analysis and visualization with our innovative AI data analyst toolkit. Designed to streamline your data exploration process, this tool transforms complex datasets into engaging visual insights, empowering you to make informed decisions. Discover how our AI data analyst can elevate your analytics game by enhancing your ability to interpret data and drive business success.

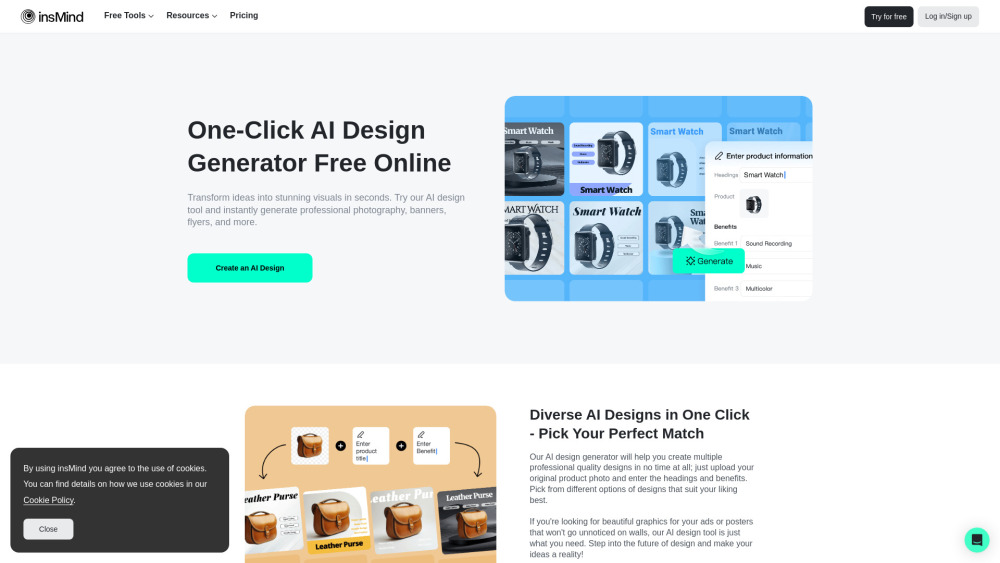

Unlock Your Creativity with insMind AI Design Generator – a powerful tool that allows you to create professional-quality graphic designs for free. With a single click, you can effortlessly generate outstanding AI-driven designs specifically crafted for marketing, promotion, and business needs, saving you the hassle and expense of hiring a designer. Start creating eye-catching designs with AI today!
