Analyzing Microsoft's Copilot AI: Understanding Erratic Responses and the PUA User Phenomenon

Most people like

Unlock the full potential of your videos and audio recordings by repurposing them into captivating content. This guide will show you how to effectively convert your multimedia into blog posts, social media updates, podcasts, and more. By leveraging existing assets, you can expand your reach, engage your audience, and maximize your content's impact. Discover the art of content transformation and make your multimedia shine.

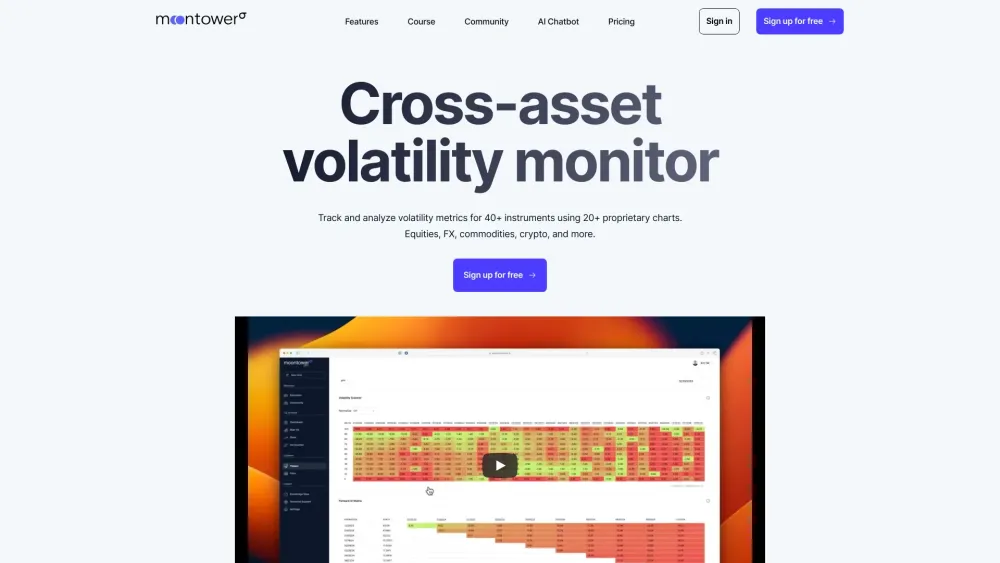

Introducing the ultimate volatility monitor for options traders: a comprehensive cross-asset analysis tool designed to enhance your trading strategy and decision-making. Stay informed with real-time insights into market fluctuations across various asset classes, enabling you to seize opportunities and mitigate risks effectively.

Typli.AI is an innovative AI-driven platform designed specifically for digital marketers and content creators. It simplifies content generation and enhances optimization, empowering users to produce high-quality material effortlessly.