Apple Unveils Groundbreaking MM1 Multimodal AI Model, Ushering in a New Era of Artificial Intelligence

Most people like

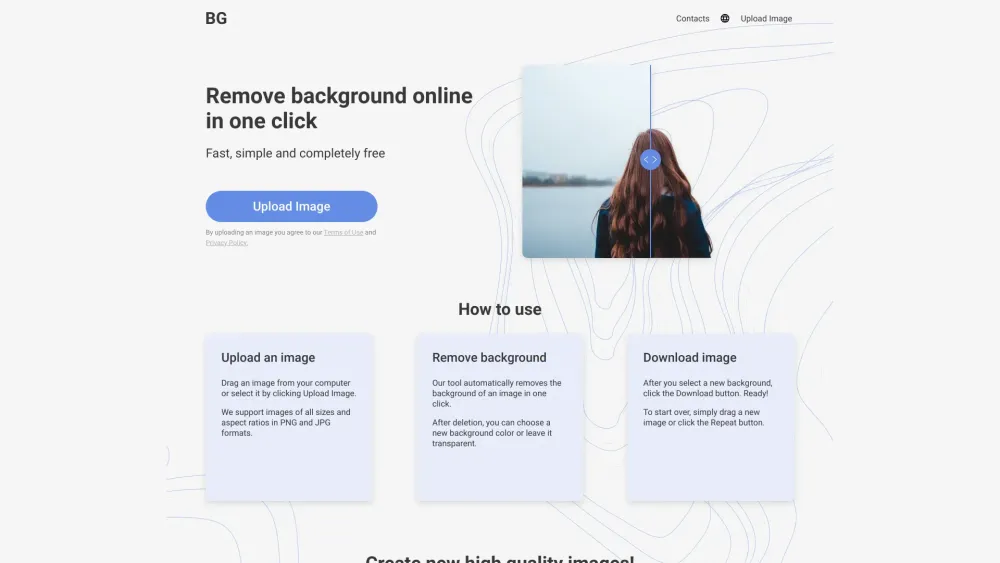

Easily remove the background from your images with just a single click! Whether you're enhancing photos for personal projects or professional presentations, our user-friendly tool simplifies the editing process, allowing you to achieve stunning results in no time. Say goodbye to complicated software and hello to effortless image editing!

Discover the power of our AI News Digest and Summary Tool, designed to keep you updated with the latest news effortlessly. This cutting-edge tool harnesses the capabilities of artificial intelligence to curate and summarize news articles, providing you with concise and relevant information tailored to your interests. Stay informed without the overwhelm, and enhance your reading experience with curated insights from the world of news.

Introducing an AI-driven SaaS platform designed to enhance and scale your digital product sales effectively.

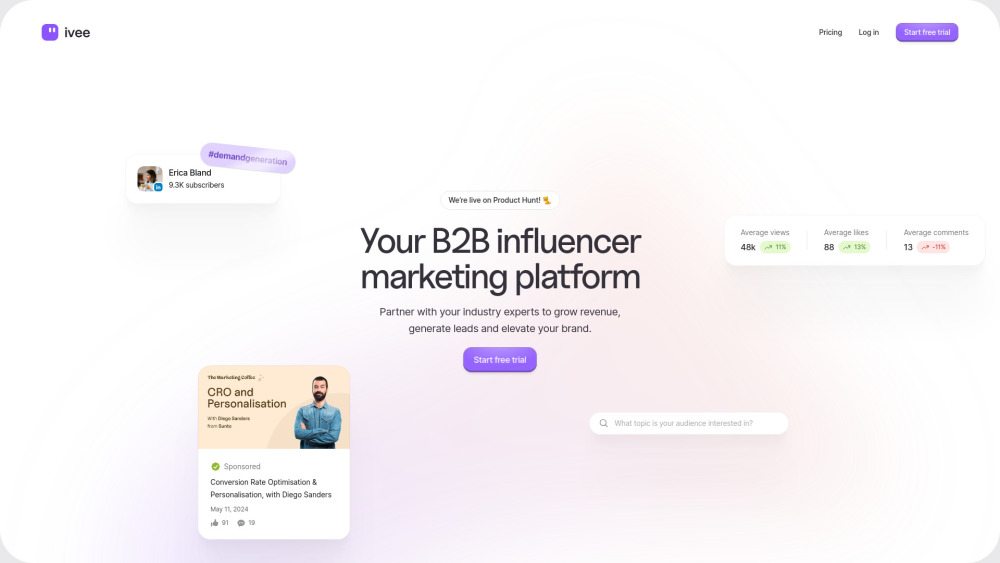

In today's digital landscape, B2B influencer marketing platforms have emerged as powerful tools for businesses aiming to enhance their brand visibility and credibility. By partnering with industry leaders and influencers, companies can effectively engage their target audience, build trust, and drive conversions. This article explores the key benefits and strategies for leveraging B2B influencer marketing platforms to elevate your marketing efforts and achieve sustainable growth. Discover how these platforms can transform your approach to reaching clients and generating leads in a competitive marketplace.

Find AI tools in YBX