Apple Unveils New AI Assistant with Screen Understanding and Voice Response Features

Most people like

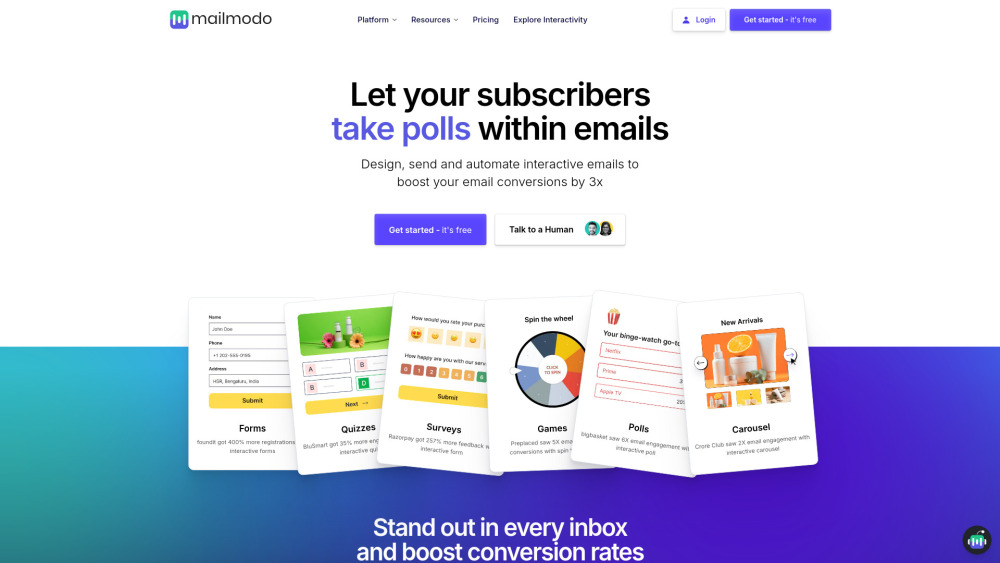

Discover the power of our email marketing platform, designed to help you craft interactive emails that significantly enhance audience engagement. With our user-friendly tools and features, you can create visually stunning campaigns that captivate your subscribers and drive better results.

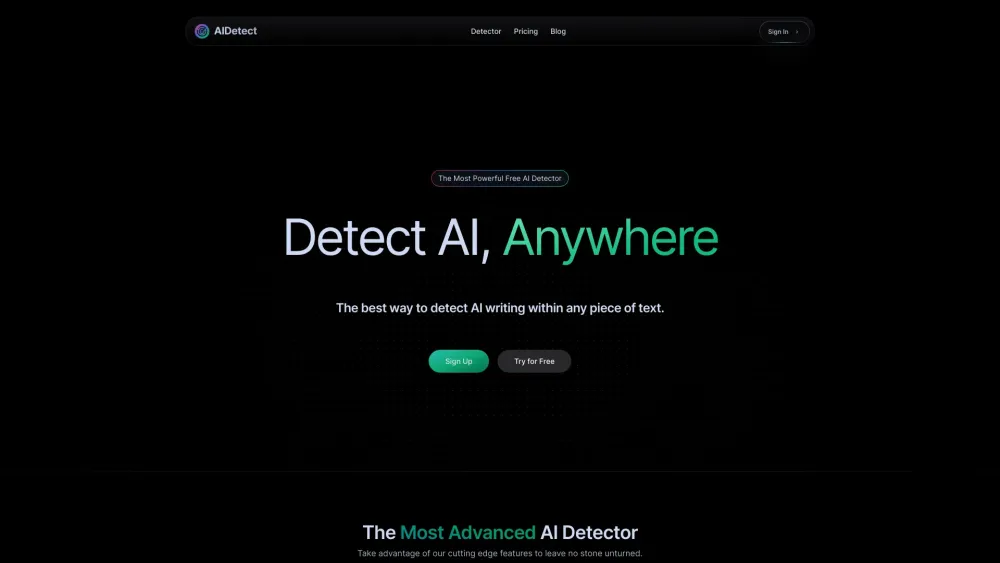

Detecting AI writing probability is becoming increasingly important as artificial intelligence tools evolve in sophistication. By understanding how to identify AI-generated text, we can enhance our ability to discern authentic human expression from machine-generated content. This guide will explore effective strategies and methods for assessing the likelihood that a piece of writing was produced by AI, equipping you with the skills to navigate this rapidly changing landscape. Whether you're a content creator, educator, or simply curious, these insights will empower you to critically evaluate the information you consume.

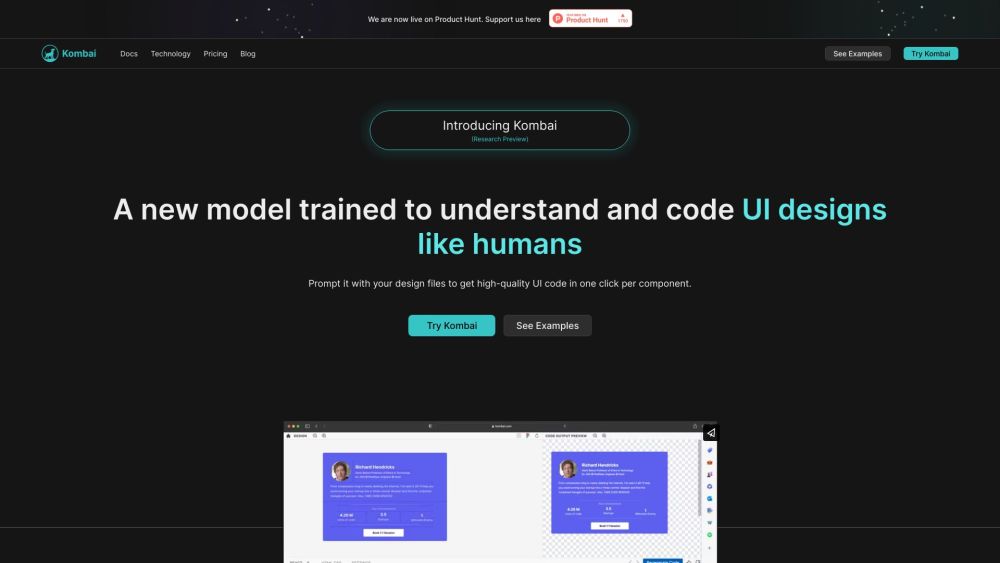

Kombai is an innovative AI-driven tool designed to seamlessly transform Figma designs into precise front-end code. Experience the future of design-to-code conversion with unparalleled accuracy and efficiency.

Discover the ultimate web development framework tailored for modern applications. This innovative framework empowers developers to create dynamic, responsive, and feature-rich experiences that engage users and enhance functionality. Whether you're building a simple website or a complex web application, this framework provides the tools you need to succeed in today's digital landscape.
