OpenAI Unveils Sora: The Revolutionary Video Generation Model Reshaping the Future of the Film Industry

Most people like

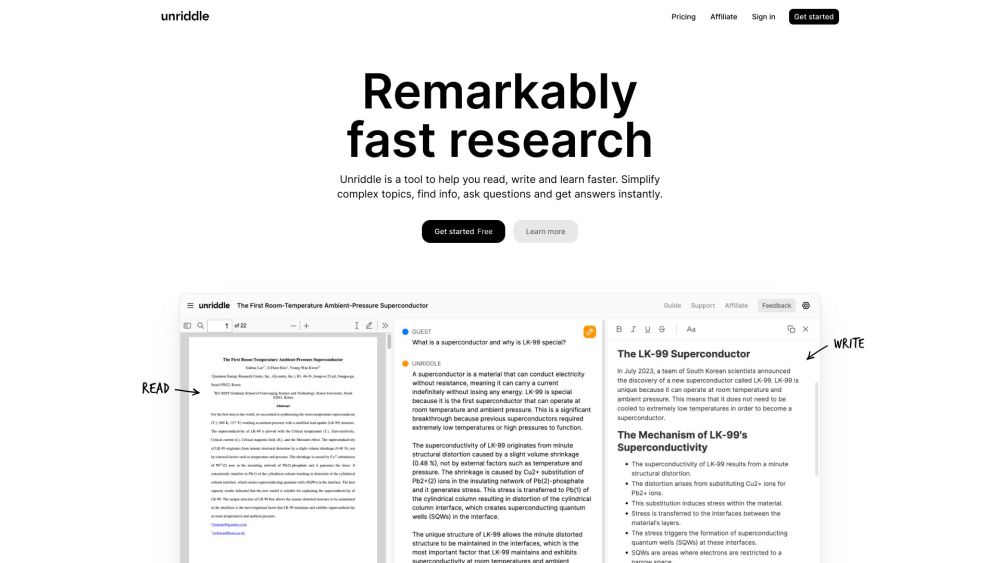
