Anthropic, a competitor of OpenAI, recently set a new standard for transparency in the generative AI industry by publicly releasing the system prompts for its Claude family of AI models. Industry observers see the move as a significant step toward shedding light on how AI systems work.

System prompts serve as the operating instructions for large language models (LLMs), outlining the general guidelines a model should follow when interacting with users. They can also state the model's knowledge cut-off date, the most recent point covered by its training data.

While many LLMs use system prompts, not all AI companies share them publicly, and a growing number of AI "jailbreakers" try to uncover them. Anthropic has preempted these efforts by publishing the system prompts for its Claude 3.5 Sonnet, Claude 3 Haiku, and Claude 3 Opus models in the release notes section of its website.

Additionally, Alex Albert, Anthropic’s Head of Developer Relations, committed on X (formerly Twitter) to keeping the public informed about updates to Claude's system prompts, stating, “We’re going to log changes we make to the default system prompts on Claude dot ai and our mobile apps.”

Insights from Anthropic’s System Prompts

The system prompts for Claude 3.5 Sonnet, Claude 3 Haiku, and Claude 3 Opus reveal valuable information about each model’s capabilities, knowledge cut-off dates, and unique personality traits.

- Claude 3.5 Sonnet, the most advanced of the three, has a knowledge base updated as of April 2024. It gives detailed answers to complex questions and concise answers to simple ones. It handles controversial topics with care, presenting the requested information without explicitly calling the topic sensitive or claiming to present objective facts. Notably, it avoids filler phrases and never identifies people from their faces in image inputs.

- Claude 3 Opus, with a knowledge base updated as of August 2023, excels at complex tasks and writing. Like Sonnet, it gives concise answers to basic queries and thorough responses to complex ones. Opus approaches controversial topics by offering a range of perspectives and avoiding stereotyping, aiming for balanced views. However, its prompt lacks some of the detailed behavioral guidelines found in Sonnet's, such as minimizing apologies and affirmations.

- Claude 3 Haiku, the fastest member of the Claude family, also has a knowledge base updated as of August 2023. It prioritizes fast, concise responses to simple questions and thorough answers to more complex ones. Its prompt is straightforward, focusing on speed and efficiency without the more advanced behavioral nuances found in Sonnet's.

The Importance of Transparency in AI

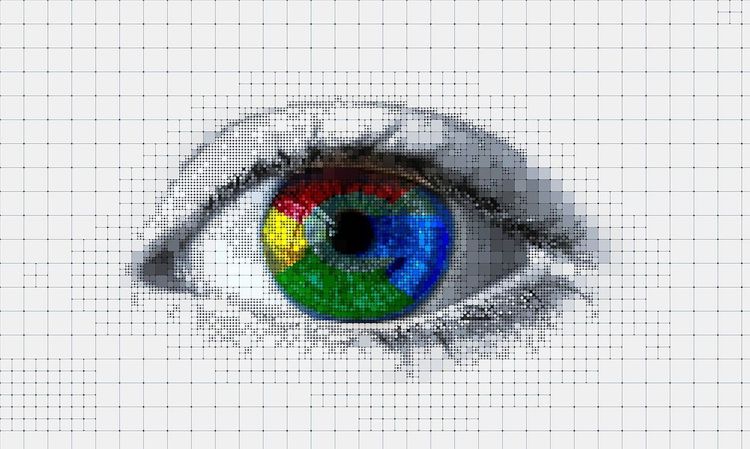

One of the main criticisms of generative AI systems is the "black box" problem: it is hard to see why a model produces a given output. This has spurred research into AI explainability, which aims to clarify how models arrive at their predictions. Publishing system prompts does not open the black box itself, but it does let users see the explicit rules that shape the models' behavior, which is a step toward closing the transparency gap.

Anthropic's release has been well received by the AI development community, which sees it as a step toward greater transparency among AI firms.

Limitations on Openness

Despite releasing the system prompts, Anthropic has not open-sourced the Claude models themselves: their source code, training datasets, and model weights remain proprietary. Nevertheless, the initiative shows one way other AI companies could become more transparent, helping users understand how their AI chatbots are designed to behave.