Apple to Revise AI Feature Following Inaccurate News Alerts

Most people like

Introducing a cutting-edge language model that crafts text indistinguishable from that written by humans. This advanced technology enhances content creation, delivering high-quality, engaging written material with ease.

Upscalepics is a free online tool designed to enhance and manipulate images with ease and precision. Perfect for anyone looking to improve image quality, this user-friendly platform offers powerful features for stunning visual transformations.

In today's fast-paced world, an AI assistant can revolutionize the way you approach everyday tasks. From managing schedules to providing personalized recommendations, these intelligent tools are designed to enhance your productivity and streamline your workflow. Embrace the power of AI and discover how it can simplify your life while tackling a multitude of responsibilities. Explore the endless possibilities of an AI assistant tailored to meet your needs.

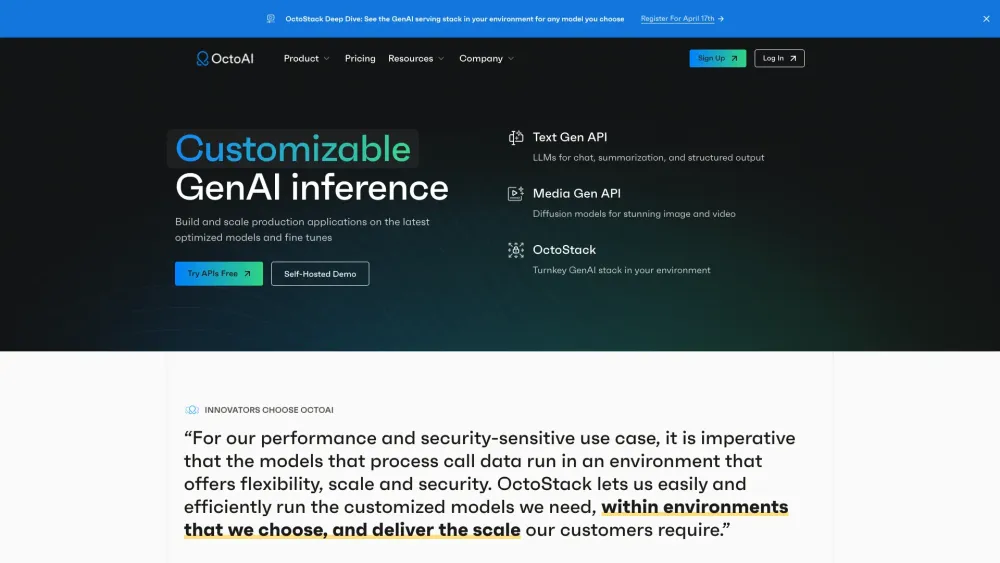

Introducing a cloud-based platform designed specifically for generative AI applications. This innovative solution harnesses the power of the cloud to streamline processes, enhance creativity, and drive efficiency in AI development. Explore how our platform can transform your projects and unlock new possibilities in the generative AI landscape.
