Cerebras and G42 have officially announced Condor Galaxy 3, an AI supercomputer rated at eight exaFLOPs of peak AI performance.

That capability comes from 58 million AI-optimized cores, according to Andrew Feldman, CEO of Sunnyvale, California-based Cerebras. G42, an Abu Dhabi-based provider of cloud and generative AI solutions, will use the supercomputer to deliver advanced AI services. Feldman emphasized that it will be one of the world's largest AI supercomputers.

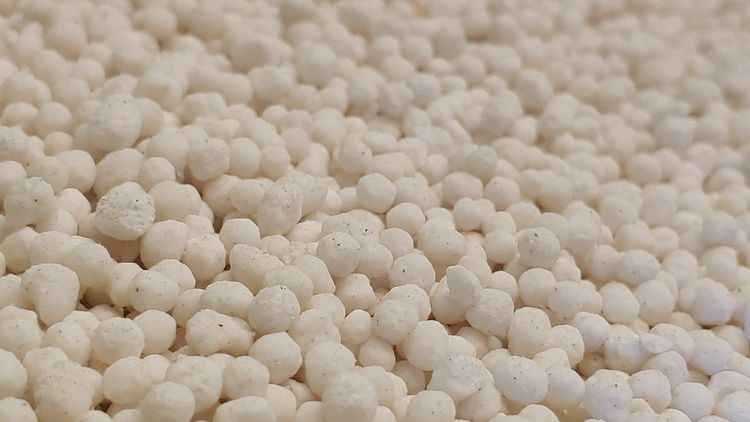

Condor Galaxy 3 is built from 64 Cerebras CS-3 systems, each powered by the Wafer-Scale Engine 3 (WSE-3), which Cerebras bills as the industry's fastest AI chip. Together, these systems deliver the machine's headline performance and are designed for training very large AI models.

“Our approach involves building vast, high-speed AI supercomputers, evolving from smaller clusters to larger systems capable of training substantial models,” Feldman explained.

Cerebras takes an unusual approach to chip design: instead of dicing a silicon wafer into many separate chips, it builds a single processor that spans the wafer, so its cores communicate on-chip rather than across slower chip-to-chip links. This lets Cerebras place 900,000 cores on a single wafer.

Located in Dallas, Texas, Condor Galaxy 3 is the third system in the Condor Galaxy network. The two earlier supercomputers built by Cerebras and G42 together deliver eight exaFLOPs, so Condor Galaxy 3 doubles the network's combined total to 16 exaFLOPs. By the end of 2024, the Condor Galaxy network is expected to exceed 55 exaFLOPs of AI computing power, with nine AI supercomputers planned overall.

Kiril Evtimov, Group CTO of G42, expressed his enthusiasm: “Condor Galaxy 3 strengthens our shared vision to revolutionize the global landscape of AI computing. Our existing network has already trained leading open-source models, and we anticipate even greater innovations with this enhanced performance.”

The 64 CS-3 systems in Condor Galaxy 3 are powered by the WSE-3, a 5-nanometer AI chip that delivers twice the performance of its predecessor at the same power and cost. Packing four trillion transistors and 900,000 AI-optimized cores, each WSE-3 reaches a peak performance of 125 petaflops.
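The headline figures follow directly from these per-chip numbers: 64 × 900,000 cores is about 58 million cores, and 64 × 125 petaflops is 8 exaFLOPs. The short sketch below reproduces that arithmetic. It assumes one WSE-3 per CS-3 system and ideal linear scaling; both per-chip figures are vendor-quoted peaks, and the helper name `aggregate` is purely illustrative.

```python
# Back-of-the-envelope check of Condor Galaxy 3's headline figures,
# using the vendor-quoted per-chip numbers for the WSE-3.
NUM_SYSTEMS = 64               # CS-3 systems in Condor Galaxy 3
CORES_PER_WSE3 = 900_000       # AI-optimized cores per WSE-3 chip
PEAK_PFLOPS_PER_WSE3 = 125     # quoted peak AI petaflops per WSE-3


def aggregate(num_systems: int) -> tuple[int, float]:
    """Return (total cores, total peak exaFLOPs) for num_systems CS-3s.

    Assumes one WSE-3 per CS-3 and perfect linear scaling (a theoretical peak).
    """
    total_cores = num_systems * CORES_PER_WSE3
    total_exaflops = num_systems * PEAK_PFLOPS_PER_WSE3 / 1_000  # 1 EF = 1,000 PF
    return total_cores, total_exaflops


cores, exaflops = aggregate(NUM_SYSTEMS)
print(f"{cores:,} cores (~{cores / 1e6:.0f} million)")  # 57,600,000 cores (~58 million)
print(f"{exaflops:g} exaFLOPs peak")                    # 8 exaFLOPs peak
```

This is a theoretical ceiling; sustained throughput on real training workloads is typically lower than peak.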

“We are proud to introduce our CS-3 systems, pivotal to our collaboration with G42,” Feldman stated. “With each subsequent iteration of the Condor Galaxy, we will scale our processing capabilities from 36 exaFLOPs to over 55 exaFLOPs, marking a significant advancement in AI computing.”

Condor Galaxy has already trained several generative AI models, including Jais-30B and Med42. Jais-30B, described as the world's leading bilingual Arabic model, is now available on Microsoft Azure, while Med42, developed in partnership with M42 and Core42, is a clinical language model.

Condor Galaxy 3 is scheduled to come online in Q2 2024.

Alongside the supercomputer, Cerebras formally announced the WSE-3 chip itself, which it claims is the fastest AI chip to date. The WSE-3 doubles the performance of the previous-generation WSE-2 while keeping the same power consumption.

Feldman noted that training large models on the CS-3 requires 97% less code than on GPUs, making the system easier to use. According to Cerebras, the CS-3 can train models ranging from 1 billion to 24 trillion parameters, simplifying training workflows.

Cerebras has already secured a strong order backlog for the CS-3 from various sectors, including enterprise and government.

Rick Stevens, Associate Laboratory Director at Argonne National Laboratory, remarked on the transformative potential of Cerebras solutions in scientific and medical AI research.

Cerebras has also entered a strategic collaboration with Qualcomm. The partnership aims to deliver up to ten times better AI inference performance by training models on the CS-3 that are optimized for Qualcomm's inference hardware.

Feldman highlighted, “This collaboration with Qualcomm allows us to train models optimized for their inference engine, thereby significantly reducing inference costs and time to ROI.”

With over 400 engineers on board, Cerebras is committed to delivering unparalleled computing power to tackle the most pressing challenges in AI today.