Could an Accurate ChatGPT Watermarking Tool Exist? OpenAI's Decision to Withhold It Explained

Most people like

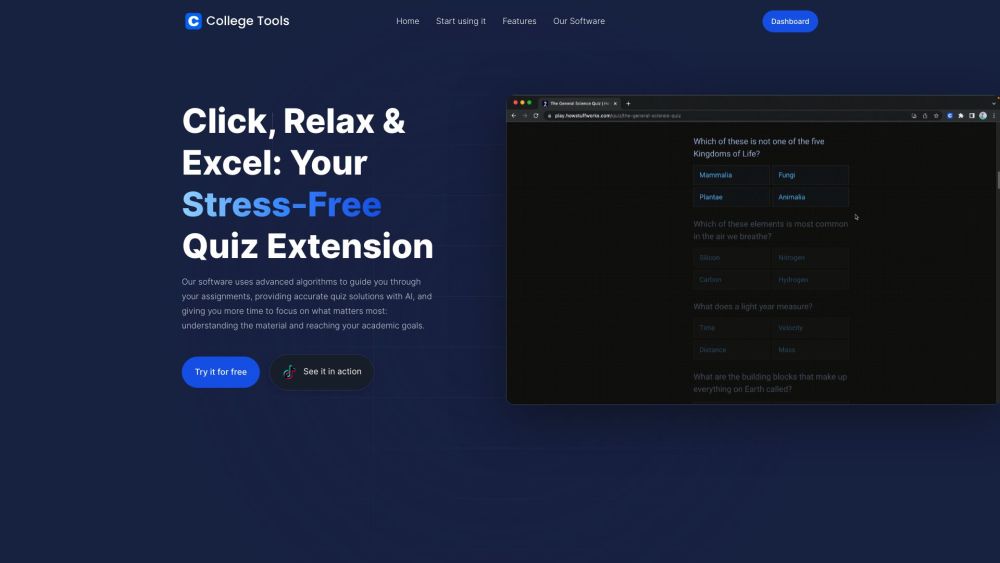

Introducing College Tools, your ultimate AI-powered exam assistant designed specifically for students. With its advanced technology, College Tools helps you streamline your study process and enhance your exam performance.

Effortlessly transform your YouTube videos into engaging TikTok clips, YouTube Shorts, and Instagram Reels with Klap—a cutting-edge AI-powered tool that streamlines content creation in just one click.

Validating Your Startup Idea and Crafting an AI-Driven Business Plan

In today's competitive landscape, ensuring your startup idea resonates is crucial for success. By leveraging innovative AI tools, you can efficiently validate your concept and create a comprehensive business plan that stands out.

Engage in meaningful conversations with your documents and PDFs! Effortlessly ask questions, extract insights, and explore content like never before. With our innovative tool, transforming static information into interactive dialogue has never been easier, allowing you to enhance your understanding and productivity. Dive into your files and unlock their full potential today!

Find AI tools in YBX