Discover the Benefits of GPT-4o Advanced Voice Mode: Introducing Hume’s EVI 2 with Emotionally Inflected Voice AI and API Solutions

Most people like

AI Excel Tools: Discover advanced data visualization techniques and AI-driven solutions designed to enhance your Excel and Google Sheets workflows for increased productivity and efficiency.

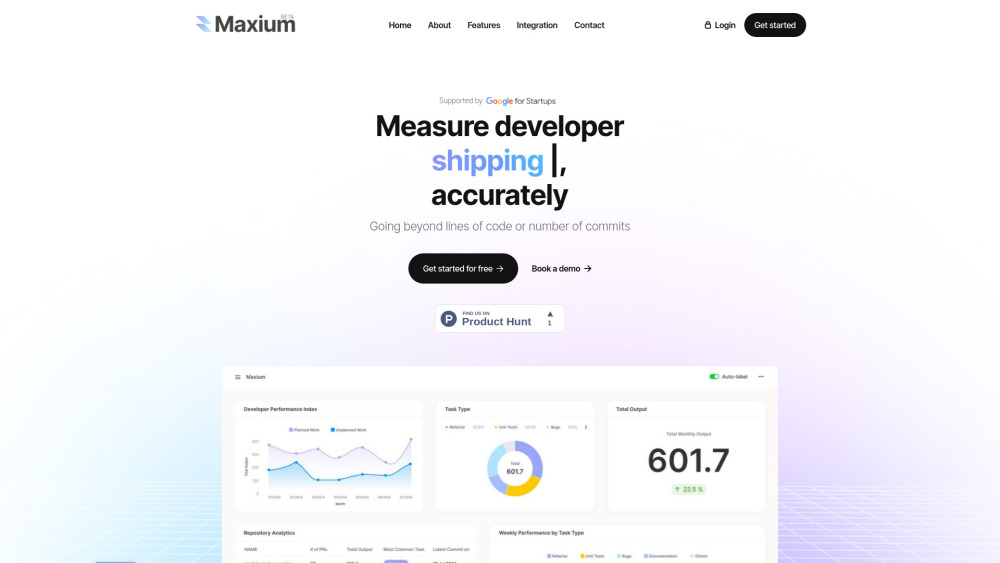

Delivers instant insights into the performance and efficiency of engineering teams. Discover how real-time data can enhance productivity and drive successful project outcomes.

Unlock the potential of your YouTube videos by converting them into captivating blog posts. This effective strategy not only enhances your content's reach and engagement but also helps to diversify your audience. By transforming your video content into written format, you can improve SEO, attract more visitors to your blog, and create valuable resources that keep your audience coming back for more. Explore how to seamlessly turn your visual storytelling into compelling written narratives!

In today's digital world, a strong first impression is crucial. AI-generated professional headshots not only enhance your online presence but also convey professionalism and approachability. Utilizing advanced artificial intelligence technology, these headshots are crafted to meet the unique style and branding needs of individuals and businesses alike. Discover how embracing AI-generated imagery can transform your personal and professional brand, setting you apart in a competitive landscape.
