Facebook's Three-Step Strategy for Enhancing AI Inclusivity


Discover PlayHT, an innovative AI Voice Generator platform that offers an impressive selection of over 600 voices in numerous languages. Explore the possibilities of transforming text into lifelike speech effortlessly.

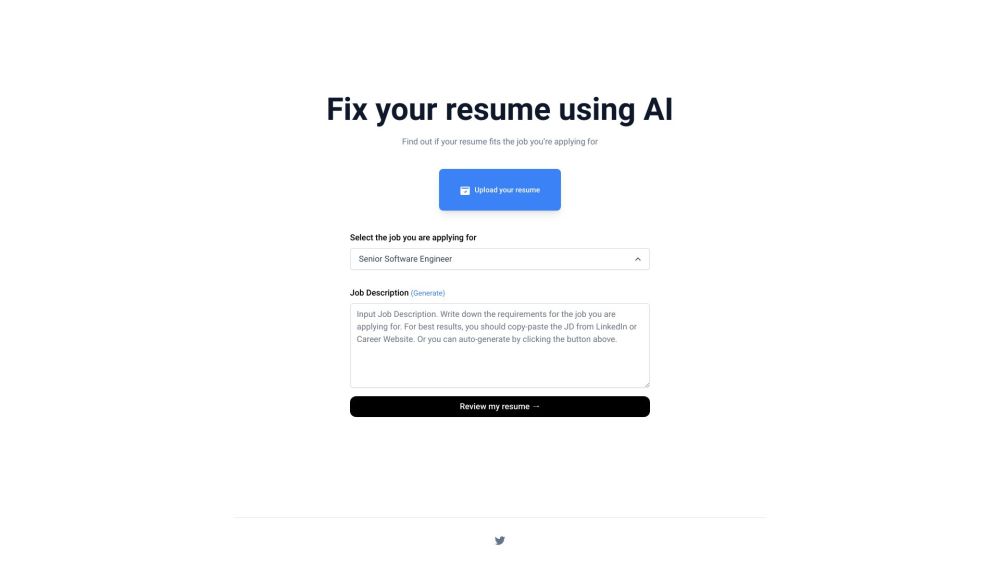

Enhance your job search with our AI-powered online platform that provides comprehensive resume reviews, helping you refine and optimize your resume for better opportunities.

