FTC Initiates Investigation of ChatGPT Developer OpenAI

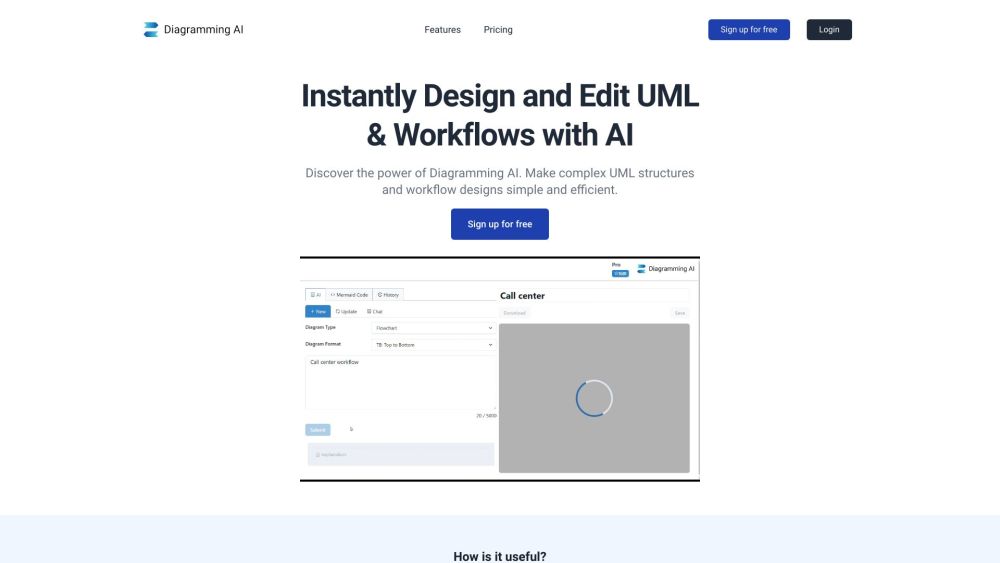
