Google and OpenAI to Collaborate with UK Government by Sharing AI Models


In an age where data privacy is paramount, finding a reliable analytics solution that does not compromise user information is crucial. This guide explores the top privacy-first alternatives to Google Analytics: tools that protect user confidentiality while still delivering useful insights. Join us as we look at how these tools respect privacy and help improve website performance.

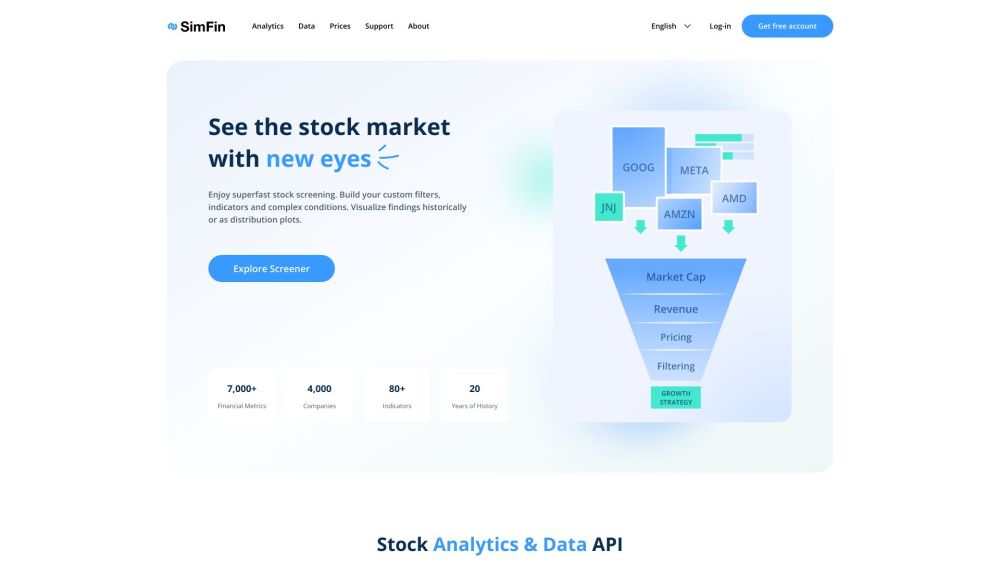

SimFin is a reliable platform that offers in-depth stock analysis and essential financial insights, making it an invaluable resource for investors and analysts alike.

In today's fast-paced digital landscape, effective communication is essential. An unrestricted chat tool lets users interact in real time without limitations. Whether you're collaborating with a team, engaging with clients, or simply connecting with friends, such a tool makes communication more efficient and helps build stronger relationships. Explore how an unrestricted chat tool can transform your conversations and remove barriers to effective dialogue.
