Google Launches Gemma 2 Series: Discover the Two Lightweight Model Options - 9B and 27B

Most people like

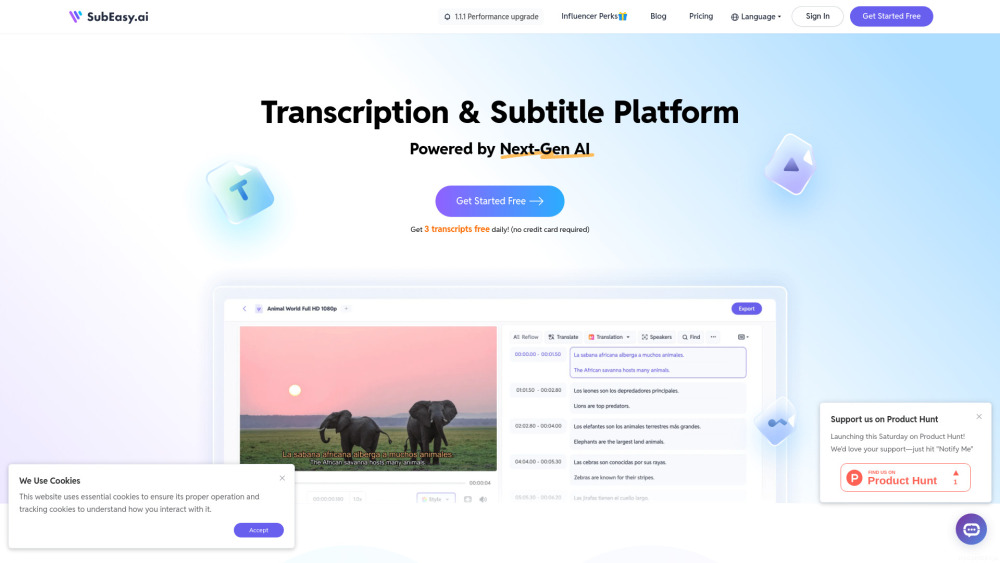

Transform your audio and video content with our advanced AI transcription and translation services. Whether you need accurate transcriptions for clarity or translations to reach a broader audience, our cutting-edge technology ensures swift and reliable results. Elevate your media with seamless accessibility and understanding today!

Unleash your creativity with PromptPal, where you can explore and share a diverse collection of AI prompts. Join our community to inspire your imagination and elevate your creative projects.

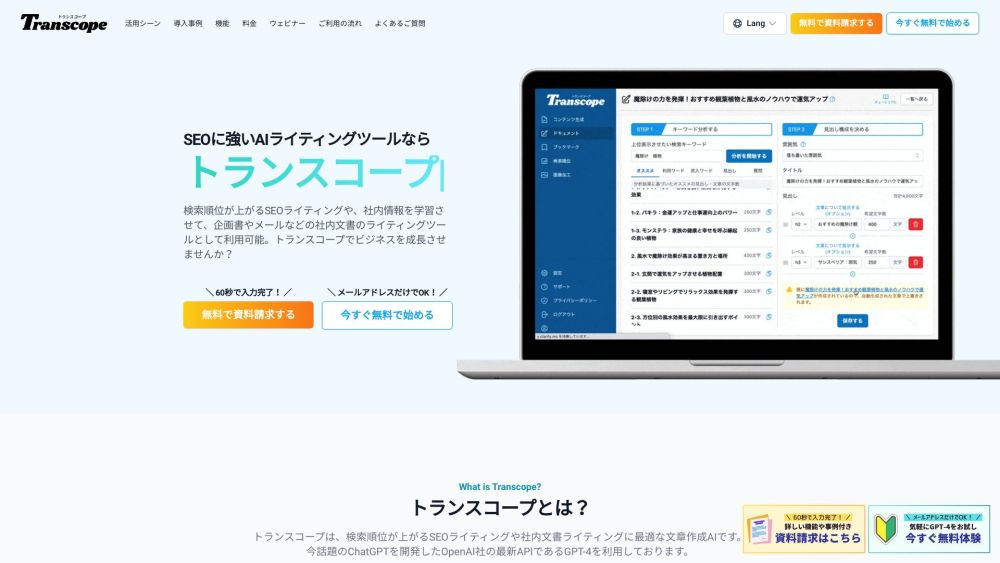

Transcope is a cutting-edge AI writing tool powered by GPT-4, designed to help you create high-quality, SEO-optimized content effortlessly. Unlock the potential of advanced writing technology to elevate your online presence with Transcope.

Find AI tools in YBX

Related Articles

Refresh Articles