Google Launches New Tool to Protect Your Data from AI Surveillance

Most people like

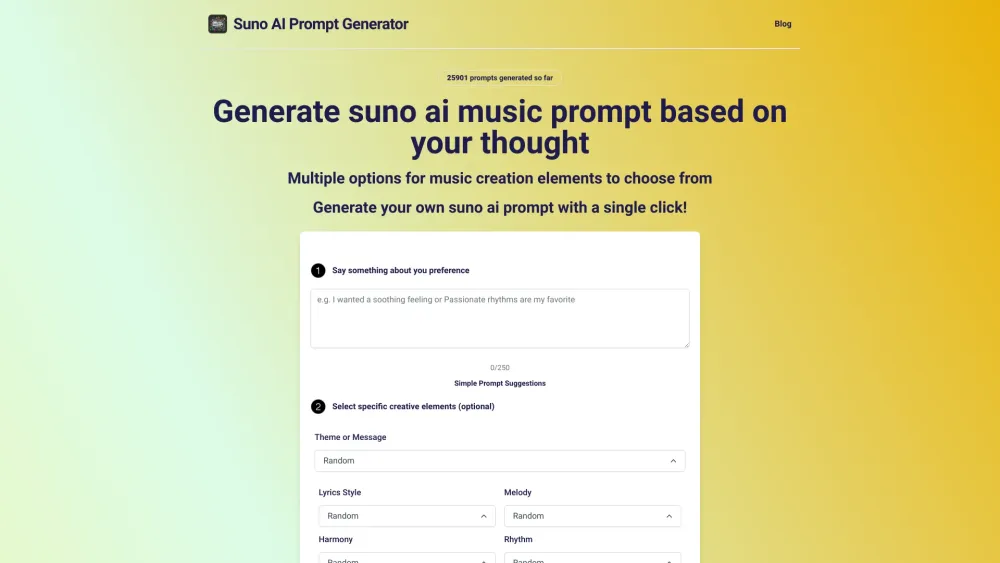

Are you looking to ignite your musical inspiration? Discover the power of transforming your ideas into captivating music prompts. By tapping into your thoughts, you can effortlessly create unique themes and melodies that resonate with you. Whether you’re an aspiring musician or a seasoned composer, this tool will help you channel your imagination into beautiful music. Start creating today!

Dover is an innovative platform designed to streamline recruiting processes, effortlessly linking businesses with exceptional talent.

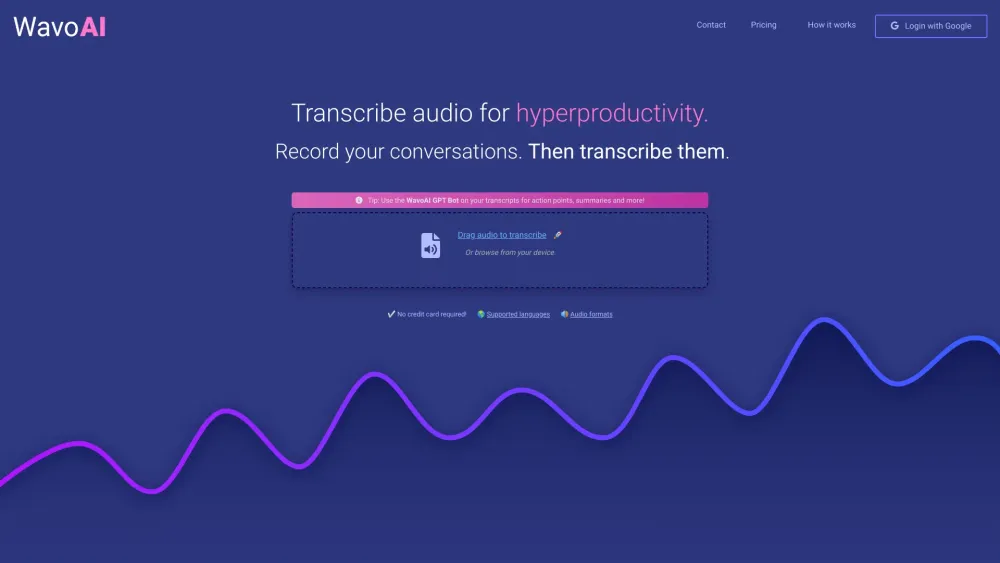

Unlock your potential and streamline your workflow with AI-driven audio transcription technology. By converting spoken words into written text, this innovative tool not only saves you time but also improves efficiency in note-taking, meeting documentation, and content creation. Discover how AI audio transcription can transform your productivity and revolutionize the way you manage information.

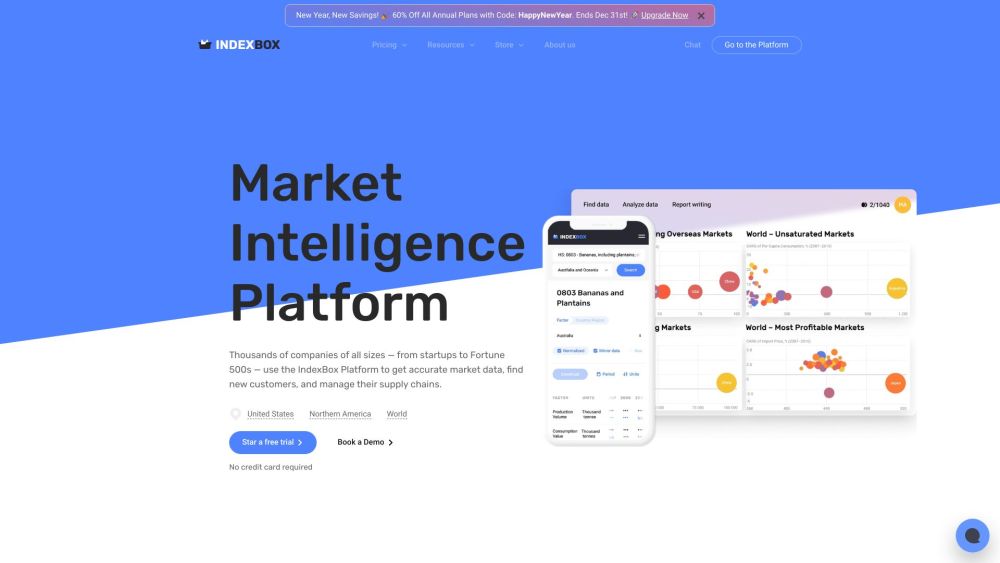

Introducing our AI-driven market intelligence platform, designed to empower businesses with actionable insights and data-driven strategies. This innovative tool harnesses advanced analytics and artificial intelligence to provide a comprehensive understanding of market trends, consumer behavior, and competitive landscapes. Whether you're a seasoned entrepreneur or a growing startup, our platform equips you with the necessary resources to make informed decisions and drive success in today's dynamic marketplace. Experience the future of business intelligence with our cutting-edge solution.
