Google Launches Gemini 2.0 Pro and Flash Thinking AI Models Amid Rising Competition from DeepSeek

Most people like

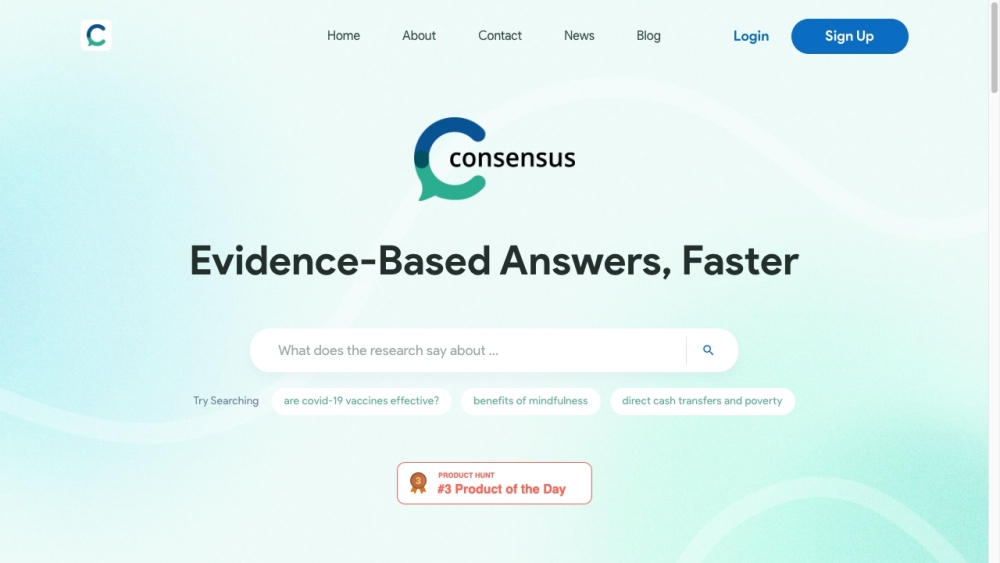

Consensus harnesses the power of AI to uncover valuable insights within research papers, streamlining the process of academic exploration and enhancing data-driven decision-making.

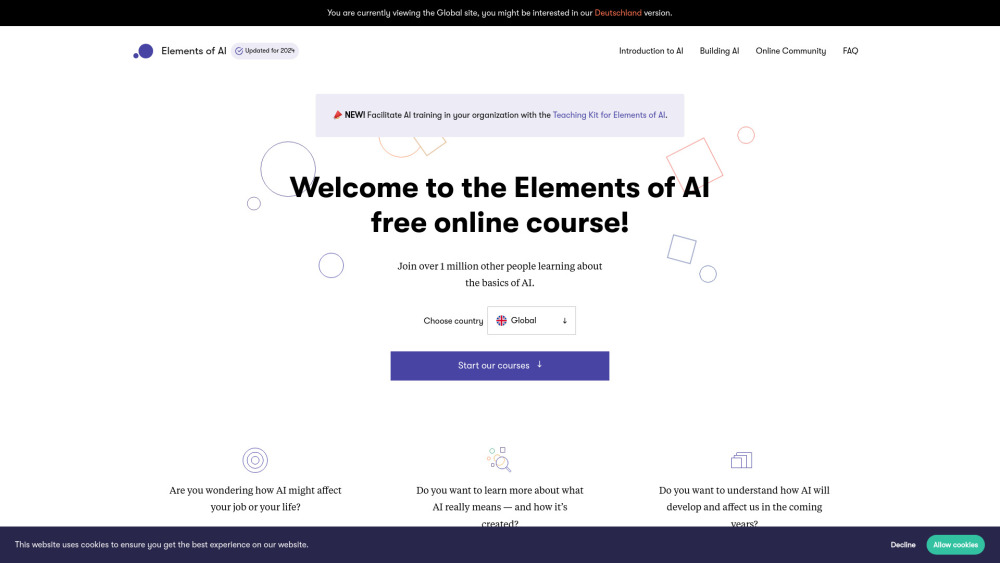


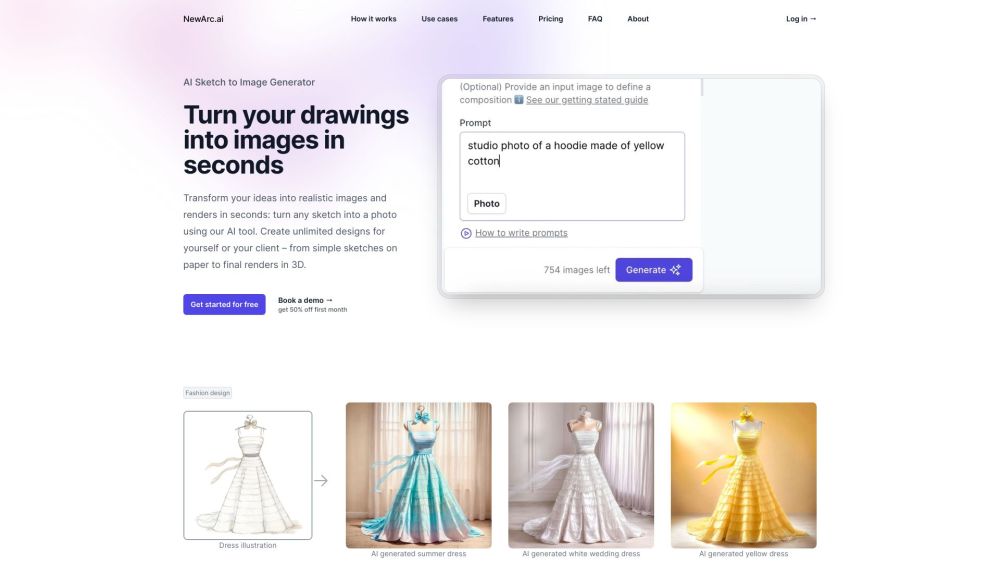

Find AI tools in YBX