Grok 3, xAI's Next-Generation Primary AI Model, Is Not Yet Available

Most people like

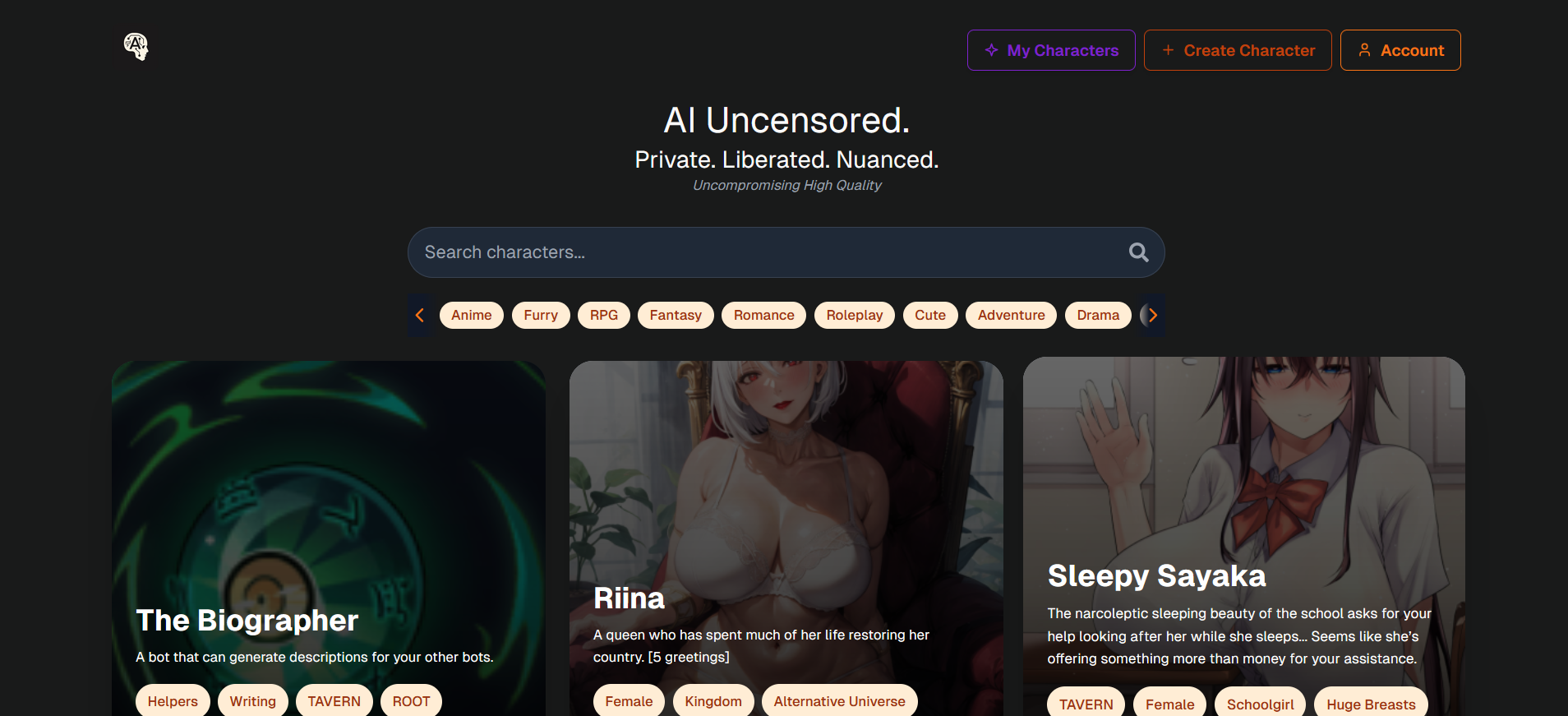

Unlimited AI messaging with thousands of uncensored AI characters. For creative and private conversations.

Unlock the potential of your eCommerce business with AdCopy.ai, an innovative AI-powered platform that helps you craft compelling ad copy effortlessly. Enhance your marketing strategy and drive conversions with expertly generated content tailored to your audience.

Introducing a cutting-edge language model designed for exceptional text generation. This powerful tool harnesses advanced AI technology to produce high-quality written content tailored to your needs. Whether you're crafting engaging articles, compelling marketing copy, or insightful reports, our state-of-the-art model delivers results that captivate and inform. Unlock the potential of AI-driven writing today!

Transform any text into engaging quizzes with our AI-powered quiz generator. Effortlessly create interactive assessments that enhance learning and retention, making education more accessible and enjoyable. Perfect for educators, students, or anyone looking to test knowledge, our tool streamlines the quiz-making process and boosts comprehension. Dive into the future of learning with our innovative quiz generator!

Find AI tools in YBX