Here’s How OpenAI Plans to Assess the Power and Capabilities of Its AI Systems

Most people like

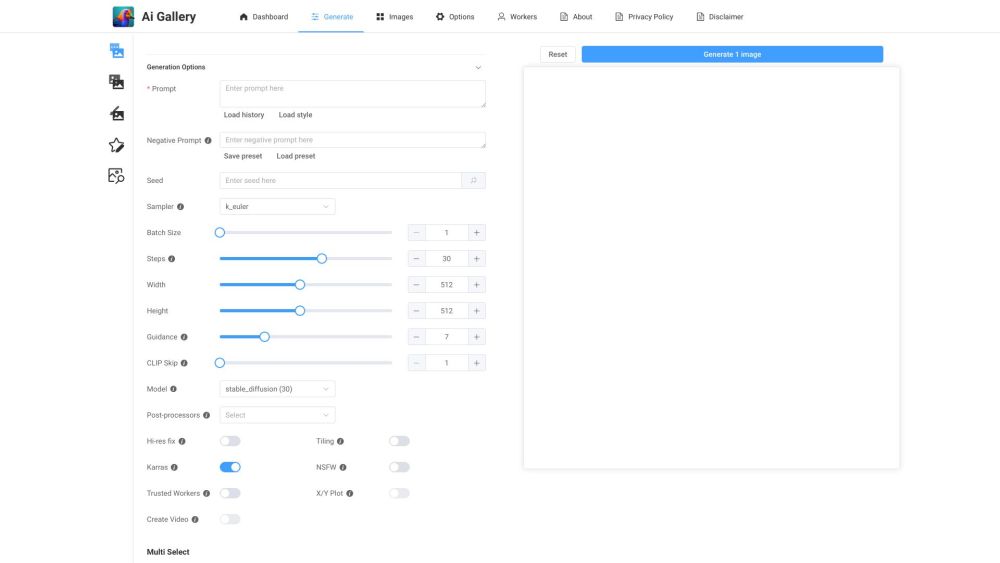

Unleash your creativity with AI Gallery’s rapid AI art generator, designed to help you craft breathtaking artworks in no time.

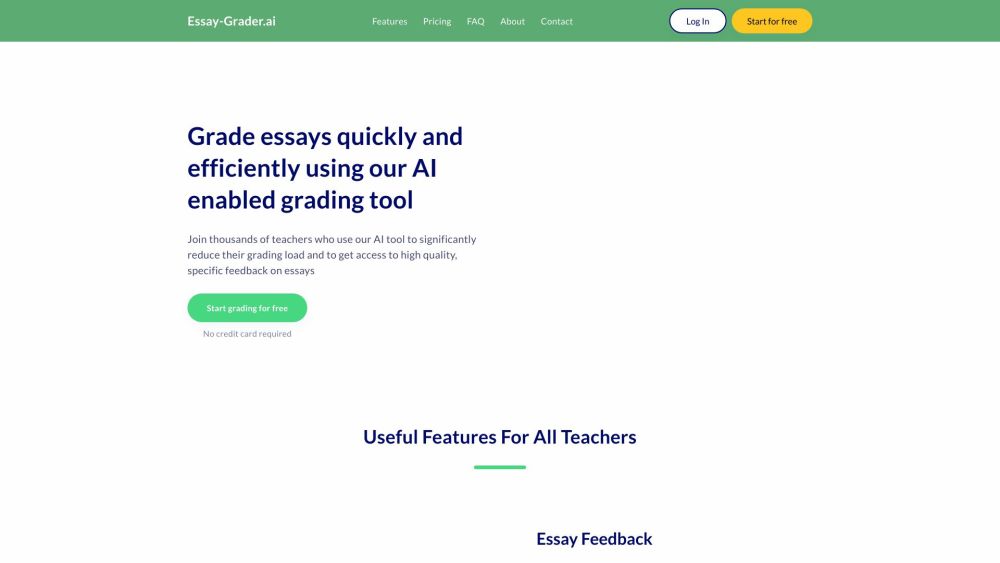

Enhance Efficiency in Grading with AI Solutions

In today’s fast-paced educational landscape, leveraging AI technology can significantly streamline the grading process. By automating tedious tasks and ensuring consistent evaluation, AI tools not only save educators valuable time but also improve the accuracy of assessments. Discover how integrating AI into your grading system can transform the educational experience for both teachers and students alike.

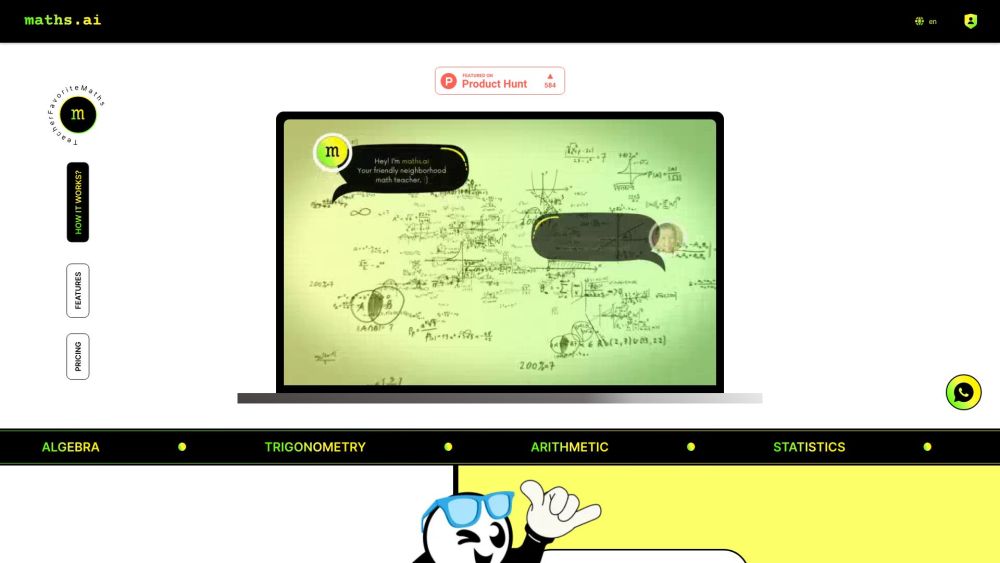

Meet your friendly AI math tutor, designed to make learning math engaging and accessible! With our intuitive platform, you'll receive personalized support to tackle everything from basic arithmetic to advanced calculus. Join countless learners who are boosting their confidence and skills with our interactive lessons and instant feedback. Let’s unlock your math potential together!

Revolutionize your productivity with our AI tool designed to prioritize workplace messages and tasks. Effortlessly manage your workload by ensuring that the most important tasks and communications are front and center, allowing you to stay focused and efficient. Discover the benefits of using advanced algorithms to streamline your day-to-day operations and enhance teamwork in your professional environment.
